# A collection of R packages designed for data science

library(tidyverse)

# Converts stats functions to a tidyverse-friendly format

library(rstatix)

# Creates diagnostic plots using ggplot2

library(ggResidpanel)10 ANOVA

Questions

- How do I analyse multiple samples of continuous data?

- What is an ANOVA?

- How do I check for differences between groups?

Objectives

- Be able to perform an ANOVA in R

- Understand the ANOVA output and evaluate the assumptions

- Understand what post-hoc testing is and how to do this in R

10.1 Purpose and aim

Analysis of variance or ANOVA is a test than can be used when we have multiple samples of continuous response data. Whilst it is possible to use ANOVA with only two samples, it is generally used when we have three or more groups. It is used to find out if the samples came from parent distributions with the same mean. It can be thought of as a generalisation of the two-sample Student’s t-test.

10.2 Libraries and functions

10.2.1 Libraries

10.2.2 Functions

# Computes summary statistics

rstatix::get_summary_stats()

# Perform Tukey's range test

rstatix::tukey_hsd()

# Creates diagnostic plots

ggResidpanel::resid_panel()

# Fits a linear model

stats::lm()

# Carries out an ANOVA on a linear model

stats::anova()

# Performs a Shapiro-Wilk test for normality

stats::shapiro.test()10.2.3 Libraries

# A Python data analysis and manipulation tool

import pandas as pd

# Simple yet exhaustive stats functions.

import pingouin as pg

# Python equivalent of `ggplot2`

from plotnine import *

# Statistical models, conducting tests and statistical data exploration

import statsmodels.api as sm

# Convenience interface for specifying models using formula strings and DataFrames

import statsmodels.formula.api as smf10.2.4 Functions

# Summary statistics

pandas.DataFrame.describe()

# Plots the first few rows of a DataFrame

pandas.DataFrame.head()

# Query the columns of a DataFrame with a boolean expression

pandas.DataFrame.query()

# Reads in a .csv file

pandas.read_csv

# Performs an analysis of variance

pingouin.anova()

# Tests for equality of variance

pingouin.homoscedasticity()

# Performs the Shapiro-Wilk test for normality

pingouin.normality()

# Creates a model from a formula and data frame

statsmodels.formula.api.ols

# Creates an ANOVA table for one or more fitted linear models

statsmodels.stats.anova.anova_lm10.3 Data and hypotheses

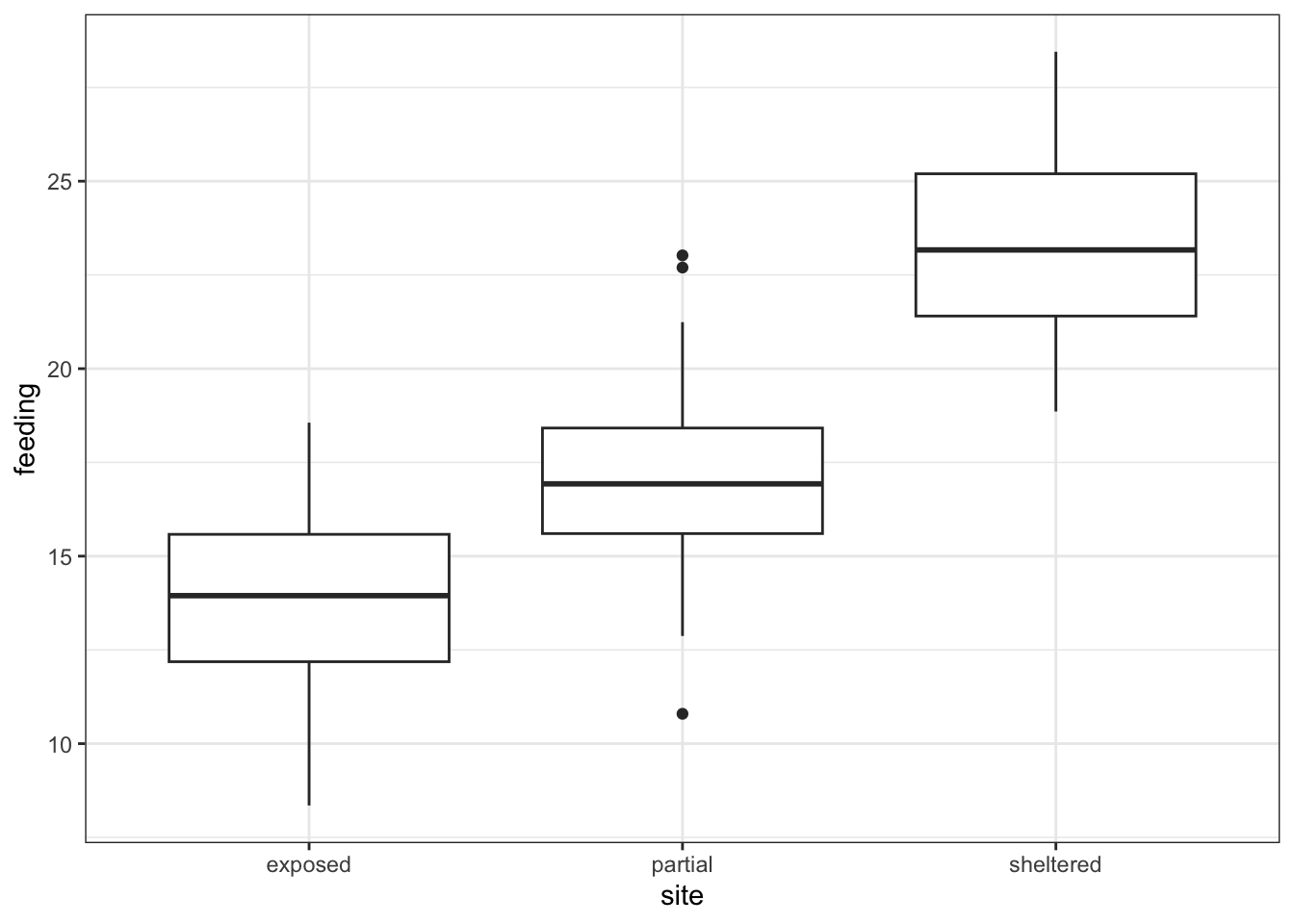

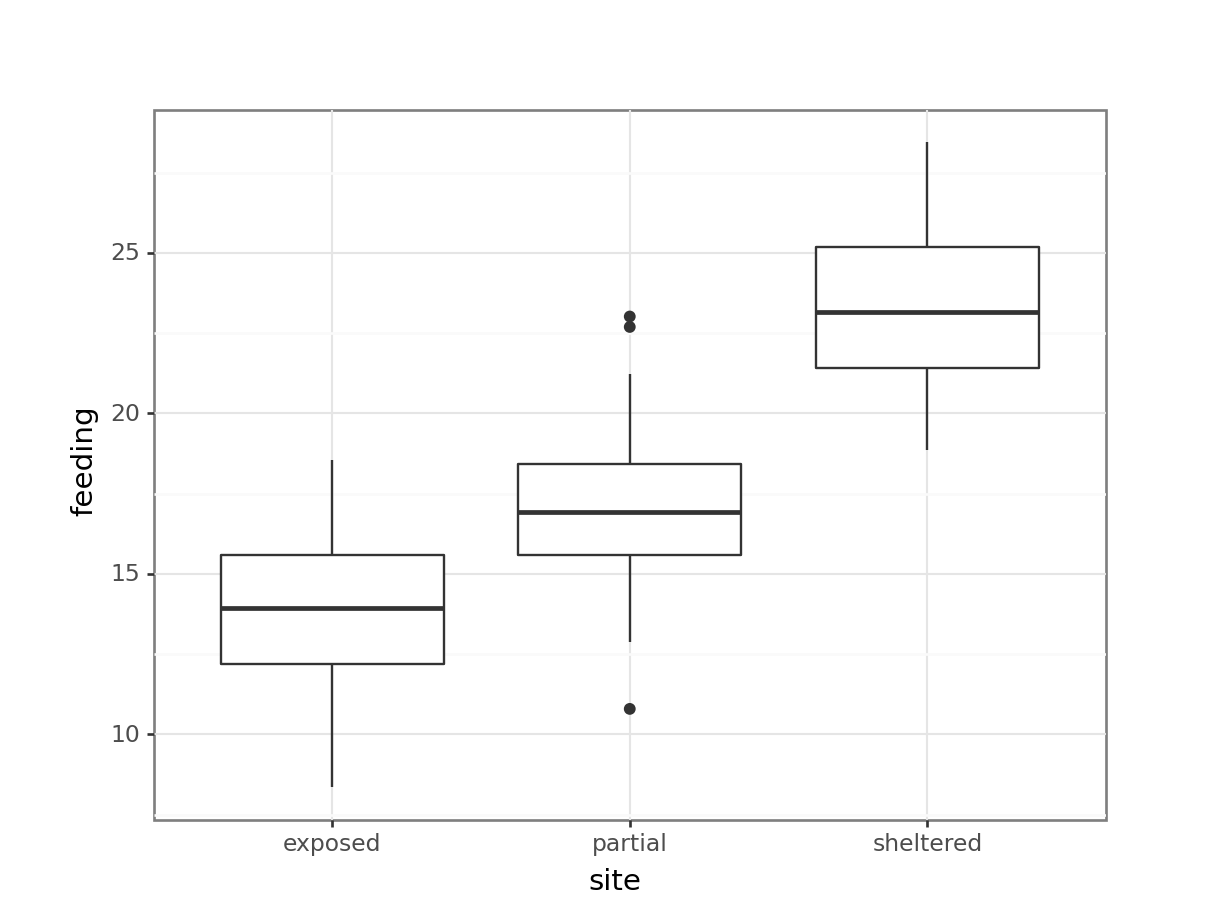

For example, suppose we measure the feeding rate of oyster catchers (shellfish per hour) at three sites characterised by their degree of shelter from the wind, imaginatively called exposed (E), partially sheltered (P) and sheltered (S). We want to test whether the data support the hypothesis that feeding rates don’t differ between locations. We form the following null and alternative hypotheses:

- \(H_0\): The mean feeding rates at all three sites is the same \(\mu E = \mu P = \mu S\)

- \(H_1\): The mean feeding rates are not all equal.

We will use a one-way ANOVA test to check this.

- We use a one-way ANOVA test because we only have one predictor variable (the categorical variable location).

- We’re using ANOVA because we have more than two groups and we don’t know any better yet with respect to the exact assumptions.

The data are stored in the file data/CS2-oystercatcher-feeding.csv.

10.4 Summarise and visualise

First we read in the data.

# load data

oystercatcher <- read_csv("data/CS2-oystercatcher-feeding.csv")

# and have a look

oystercatcher# A tibble: 120 × 2

site feeding

<chr> <dbl>

1 exposed 12.2

2 exposed 13.1

3 exposed 17.9

4 exposed 13.9

5 exposed 14.1

6 exposed 18.4

7 exposed 15.0

8 exposed 10.3

9 exposed 11.8

10 exposed 12.5

# ℹ 110 more rowsThe oystercatcher data set contains two columns:

- a

sitecolumn with information on the amount of shelter of the feeding location - a

feedingcolumn containing feeding rates

Next, we get some basic descriptive statistics:

# get some basic descriptive statistics

oystercatcher %>%

group_by(site) %>%

get_summary_stats(type = "common")# A tibble: 3 × 11

site variable n min max median iqr mean sd se ci

<chr> <fct> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 exposed feeding 40 8.35 18.6 13.9 3.40 13.8 2.44 0.386 0.781

2 partial feeding 40 10.8 23.0 16.9 2.82 17.1 2.62 0.414 0.838

3 sheltered feeding 40 18.9 28.5 23.2 3.79 23.4 2.42 0.383 0.774Finally, we plot the data by site:

# plot the data

ggplot(oystercatcher,

aes(x = site, y = feeding)) +

geom_boxplot()

First, we read in the data.

# load the data

oystercatcher_py = pd.read_csv("data/CS2-oystercatcher-feeding.csv")

# and have a look

oystercatcher_py.head() site feeding

0 exposed 12.175506

1 exposed 13.073917

2 exposed 17.939687

3 exposed 13.891783

4 exposed 14.051663The oystercatcher_py data set contains two columns:

- a

sitecolumn with information on the amount of shelter of the feeding location - a

feedingcolumn containing feeding rates

Next, we get some basic descriptive statistics per group. Here we use the pd.groupby() function to group by site. We only want to have summary statistics for the feeding variable, so we specify that as well:

oystercatcher_py.groupby("site")["feeding"].describe() count mean std ... 50% 75% max

site ...

exposed 40.0 13.822899 2.441974 ... 13.946420 15.581748 18.560404

partial 40.0 17.081666 2.619906 ... 16.927683 18.416708 23.021250

sheltered 40.0 23.355503 2.419825 ... 23.166246 25.197096 28.451252

[3 rows x 8 columns]Finally, we plot the data:

# plot the data

(ggplot(oystercatcher_py,

aes(x = "site",

y = "feeding")) +

geom_boxplot())

Looking at the data, there appears to be a noticeable difference in feeding rates between the three sites. We would probably expect a reasonably significant statistical result here.

10.5 Assumptions

To use an ANOVA test, we have to make three assumptions:

- The parent distributions from which the samples are taken are normally distributed

- Each data point in the samples is independent of the others

- The parent distributions should have the same variance

In a similar way to the two-sample tests we will consider the normality and equality of variance assumptions both using tests and by graphical inspection (and ignore the independence assumption).

10.5.1 Normality

First we perform a Shapiro-Wilk test on each site separately.

We take the data, filter for each type of site, extract the feeding rates and send those data to the shapiro.test() function.

# Shapiro-Wilk test on each site

oystercatcher %>%

filter(site == "exposed") %>%

pull(feeding) %>%

shapiro.test()

Shapiro-Wilk normality test

data: .

W = 0.98859, p-value = 0.953oystercatcher %>%

filter(site == "partial") %>%

pull(feeding) %>%

shapiro.test()

Shapiro-Wilk normality test

data: .

W = 0.98791, p-value = 0.9398oystercatcher %>%

filter(site == "sheltered") %>%

pull(feeding) %>%

shapiro.test()

Shapiro-Wilk normality test

data: .

W = 0.97511, p-value = 0.5136We use the pg.normality() function to calculate the statistic. This requires:

- the

dvdependent variable (feedingin our case) - the

groupvariable (site) - and some data

pg.normality(dv = "feeding",

group = "site",

data = oystercatcher_py) W pval normal

exposed 0.988586 0.953029 True

partial 0.987907 0.939833 True

sheltered 0.975106 0.513547 TrueWe can see that all three groups appear to be normally distributed which is good.

For ANOVA however, considering each group in turn is often considered quite excessive and, in most cases, it is sufficient to consider the normality of the combined set of residuals from the data. We’ll explain residuals properly in the next session, but effectively they are the difference between each data point and its group mean.

To get hold of these residuals, we need to create a linear model. Again, this will be explained in more detail in the next section. For now, we see it as a way to describe the relationship between the feeding rate and the site.

So, we create a linear model, extract the residuals and check their normality:

We use the lm() function to define the linear model that describes the relationship between feeding and site. The notation is similar to what we used previously when we were dealing with two samples of data.

# define the model

lm_oystercatcher <- lm(feeding ~ site,

data = oystercatcher)We can read this as “create a linear model (lm) where the feeding rate (feeding) depends on the site (site), using the oystercatcher data”.

We store the output of that in an object called lm_oystercatcher. We’ll look into what this object contains in more detail in later sections.

For now, we extract the residuals from this object using the residuals() function and then use this in the shapiro.test() function.

# extract the residuals

resid_oyster <- residuals(lm_oystercatcher)

# perform Shapiro-Wilk test on residuals

shapiro.test(resid_oyster)

Shapiro-Wilk normality test

data: resid_oyster

W = 0.99355, p-value = 0.8571Unfortunately pingouin does not have a straightforward way of extracting residuals (if you know more, please let me know!).

To get our residuals we use statsmodels, a module that provides functions for statistical models. We’ll be using this in upcoming sessions, so you’ll have a head start!

At this point you shouldn’t concern yourself too much with the exact syntax, just run it an have a look.

We need to import a few extra modules. First, we load the statsmodels.api module, which contains an OLS() function (Ordinary Least Squares - the equivalent of the lm() function in R).

We also import stats.models.formula.api so we can use the formula notation in our linear model. We define the formula as formula = "feeding ~ C(site)" with C conveying that the site variable is a category. Lastly we can .fit() the model.

If you’re familiar with this stuff then you can look at the model itself by running summary(lm_oystercatcher_py). But we’ll cover all of this in later sessions.

We load the modules, define a linear model, create a fit() and we get the residuals from the linear model fit with .resid.

import statsmodels.api as sm

import statsmodels.formula.api as smf# create a linear model

model = smf.ols(formula= "feeding ~ C(site)", data = oystercatcher_py)

# and get the fitted parameters of the model

lm_oystercatcher_py = model.fit()# get the residuals from the model fit

# and perform Shapiro-Wilk test

pg.normality(lm_oystercatcher_py.resid) W pval normal

0 0.993545 0.857013 TrueAgain, we can see that the combined residuals from all three groups appear to be normally distributed (which is as we would have expected given that they were all normally distributed individually!)

10.5.2 Equality of variance

We now test for equality of variance using Bartlett’s test (since we’ve just found that all of the individual groups are normally distributed).

Perform Bartlett’s test on the data:

# check equality of variance

bartlett.test(feeding ~ site,

data = oystercatcher)

Bartlett test of homogeneity of variances

data: feeding by site

Bartlett's K-squared = 0.29598, df = 2, p-value = 0.8624Where the relevant p-value is given on the 3rd line. Here we see that each group appears to have comparable variance.

We use the homoscedasticity() function from pingouin (homoscedasticity is another way of describing equality of variance). The default method is levene, so we need to specify that we want to use bartlett.

pg.homoscedasticity(dv = "feeding",

group = "site",

method = "bartlett",

data = oystercatcher_py) T pval equal_var

bartlett 0.295983 0.862439 TrueWhere the relevant p-value is given in the pval column. Here we see that each group appears to have the same variance.

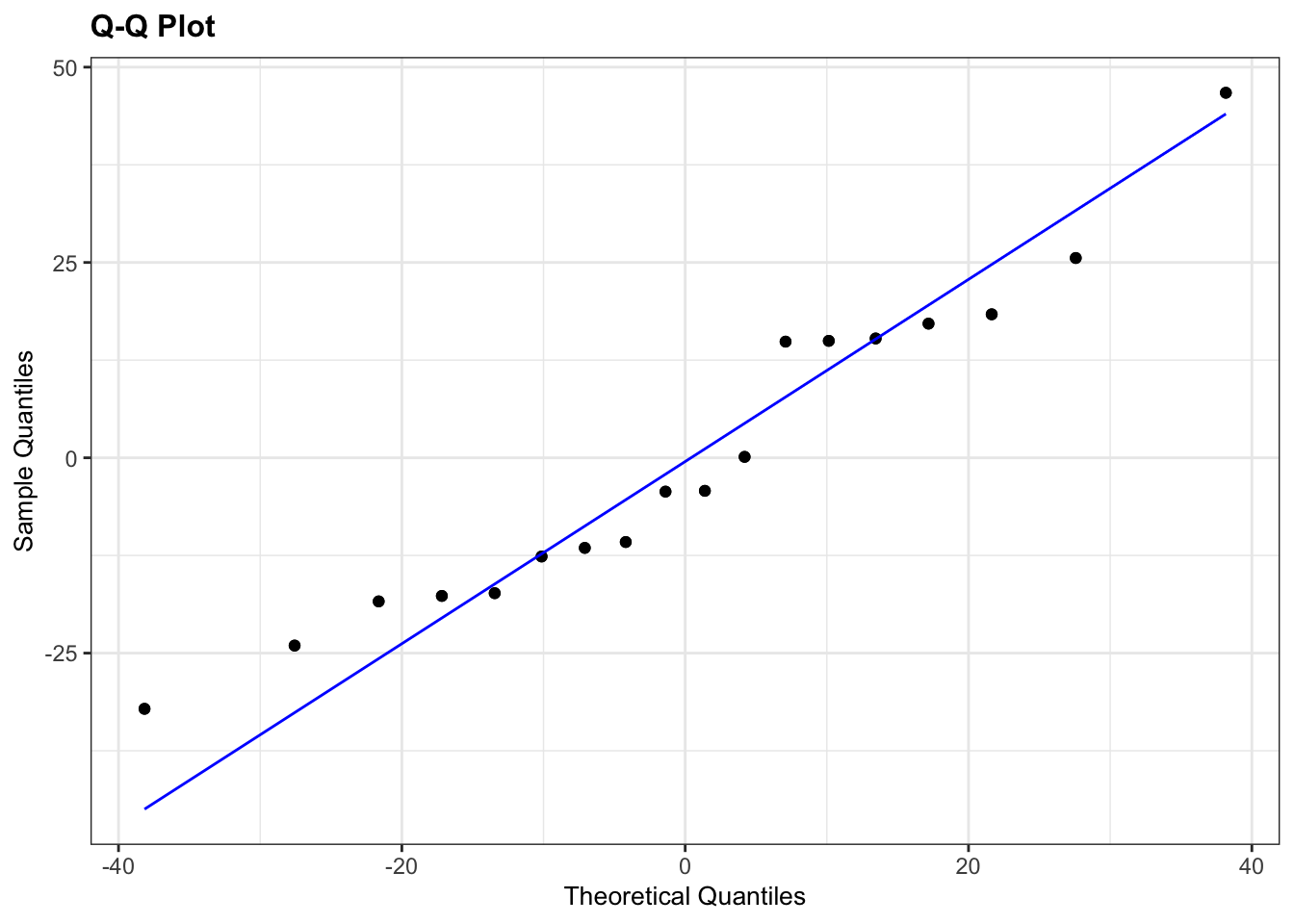

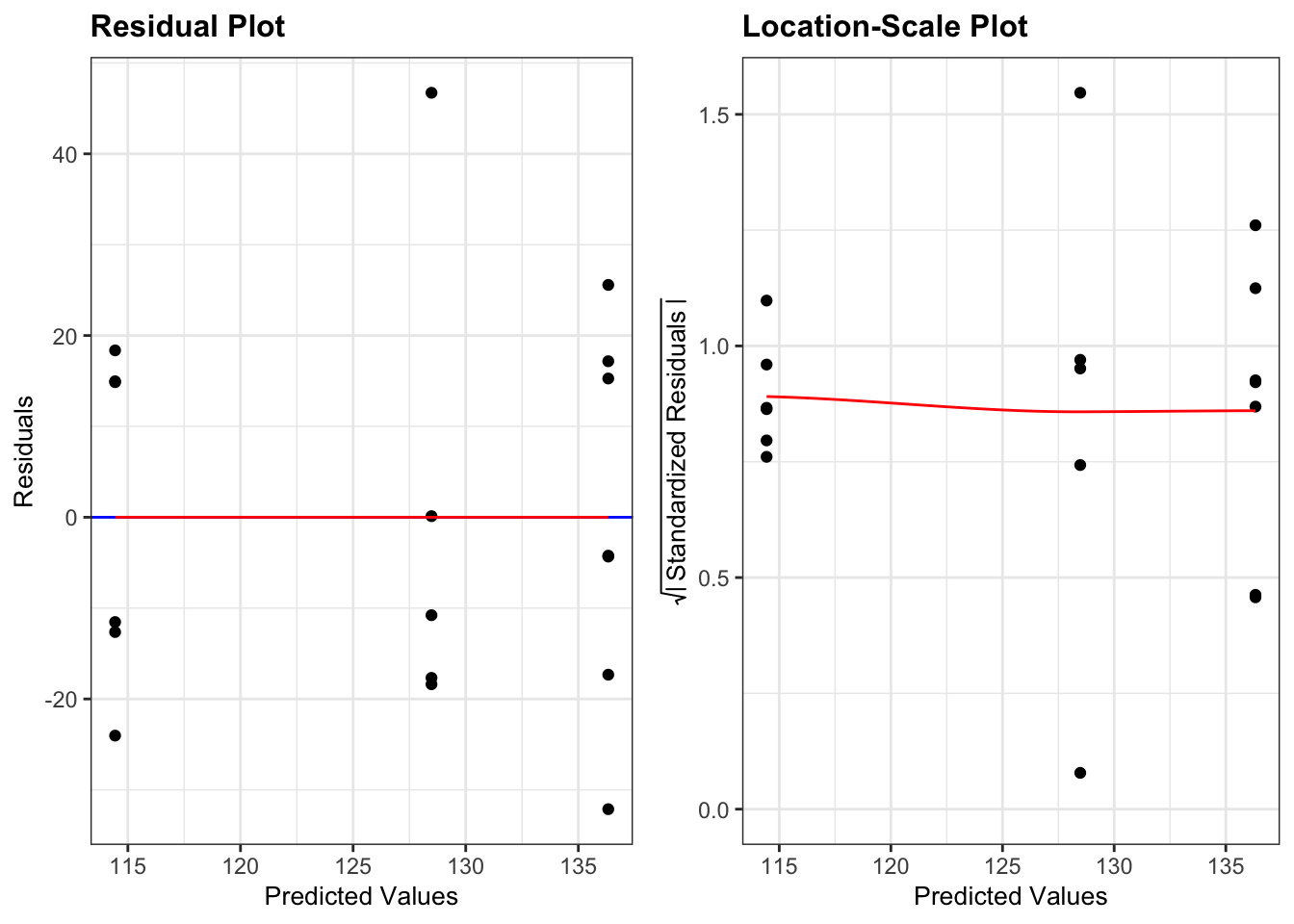

10.5.3 Graphical interpretation and diagnostic plots

Assessing assumptions via these tests can be cumbersome, but also a bit misleading at times. It reduces the answer to the question “is the assumption met?” to a yes/no, based on some statistic and associated p-value.

This does not convey that things aren’t always so clear-cut and that there is a lot of grey area that we need to navigate. As such, assessing assumptions through graphical means - using diagnostic plots - is often preferred.

In the first session we already created diagnostic Q-Q plots directly from our data, using stat_qq() and stat_qq_line(). For more specific plots this becomes a bit cumbersome. There is an option to create ggplot-friendly diagnostic plots, using the ggResidPanel package.

If you haven’t got ggResidpanel installed, please run the following code:

# install package

install.packages("ggResidpanel")

# load library

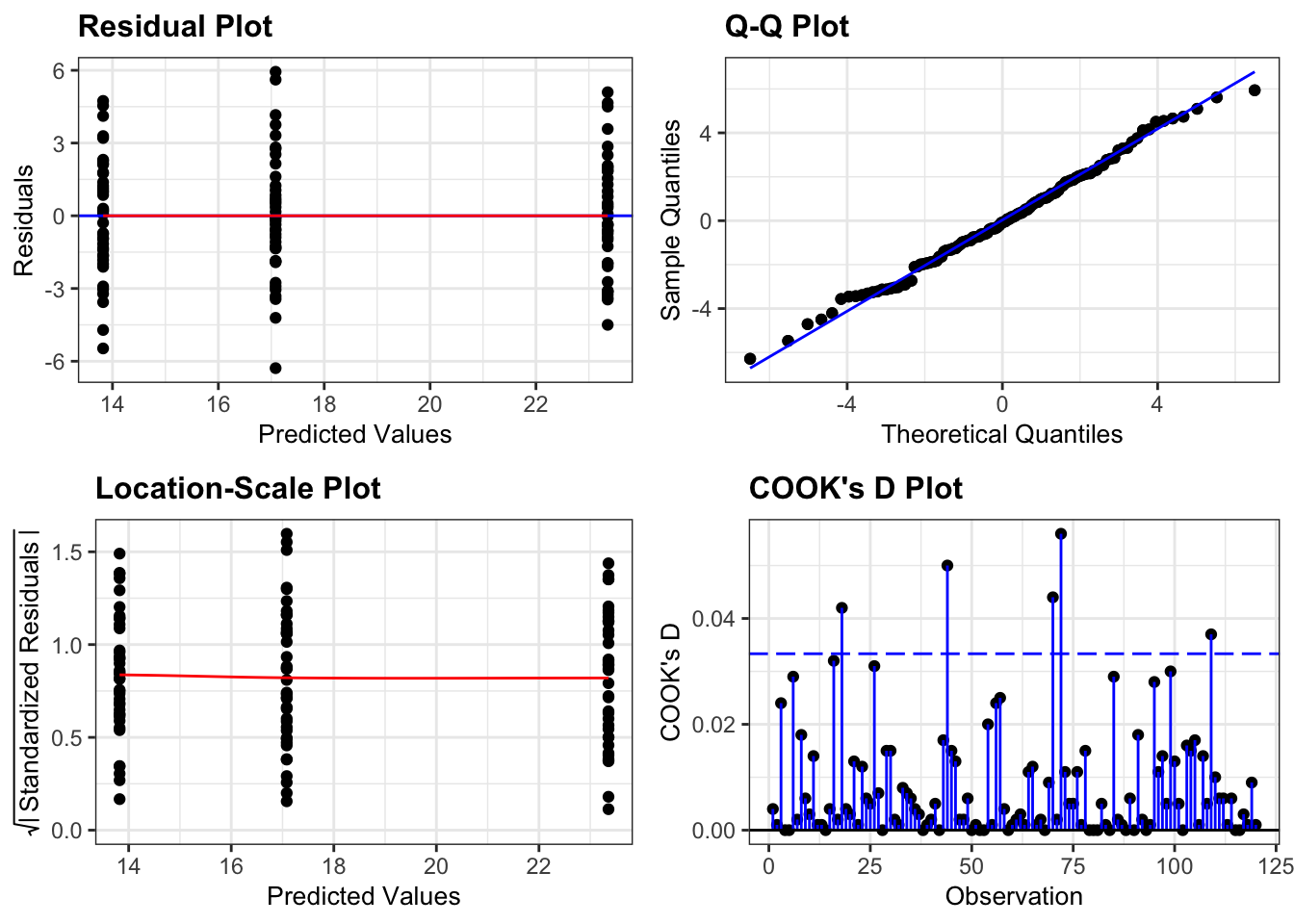

library(ggResidpanel)Let’s create the diagnostic plots we’re interested in using ggResidPanel. It takes a linear model object as input (lm_oystercatcher) and you can define which plots you want to see using the plots = argument. I have also added a smoother line (smoother = TRUE) to the plots, which we’ll use to compare against.

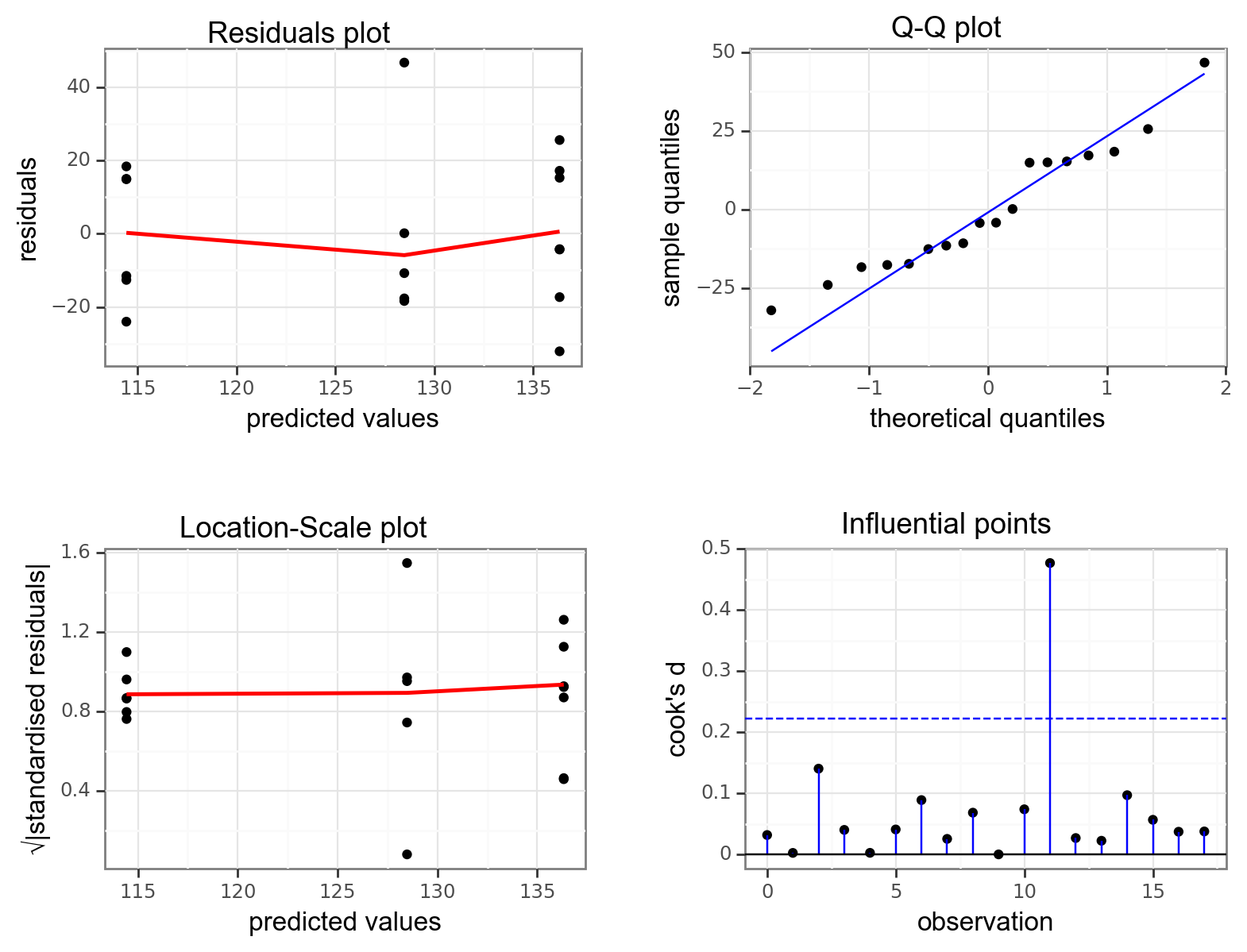

resid_panel(lm_oystercatcher,

plots = c("resid", "qq", "ls", "cookd"),

smoother = TRUE)

- The top left graph plots the Residuals plot. If the data are best explained by a linear line then there should be a uniform distribution of points above and below the horizontal blue line (and if there are sufficient points then the red line, which is a smoother line, should be on top of the blue line). This plot looks pretty good.

- The top right graph shows the Q-Q plot which allows a visual inspection of normality. If the residuals are normally distributed, then the points should lie on the diagonal blue line. This plot looks good.

- The bottom left Location-Scale graph allows us to investigate whether there is any correlation between the residuals and the predicted values and whether the variance of the residuals changes significantly. If not, then the red line should be horizontal. If there is any correlation or change in variance then the red line will not be horizontal. This plot is fine.

- The last graph shows the Cook’s distance and tests if any one point has an unnecessarily large effect on the fit. A rule of thumb is that if any value is larger than 1.0, then it might have a large effect on the model. If not, then no point has undue influence. This plot is good. There are different ways to determine the threshold (apart from simply setting it to 1) and in this plot the blue dashed line is at

4/n, withnbeing the number of samples. At this threshold there are some data points that may be influential, but I personally find this threshold rather strict.

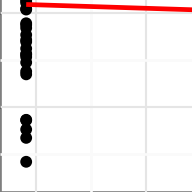

Unfortunately Python doesn’t provide a convenient way of displaying the same diagnostic plots as R does.

I created a function dgplots() (which stands for Diagnostic Plots - very original I know…) that does this for you. All you need to do is create a linear model, get the fit and feed that to the dgplots() function.

You can of course plot the model values yourself by extracting them from the linear model fit, but this should provide a convenient way to avoid that kind of stuff.

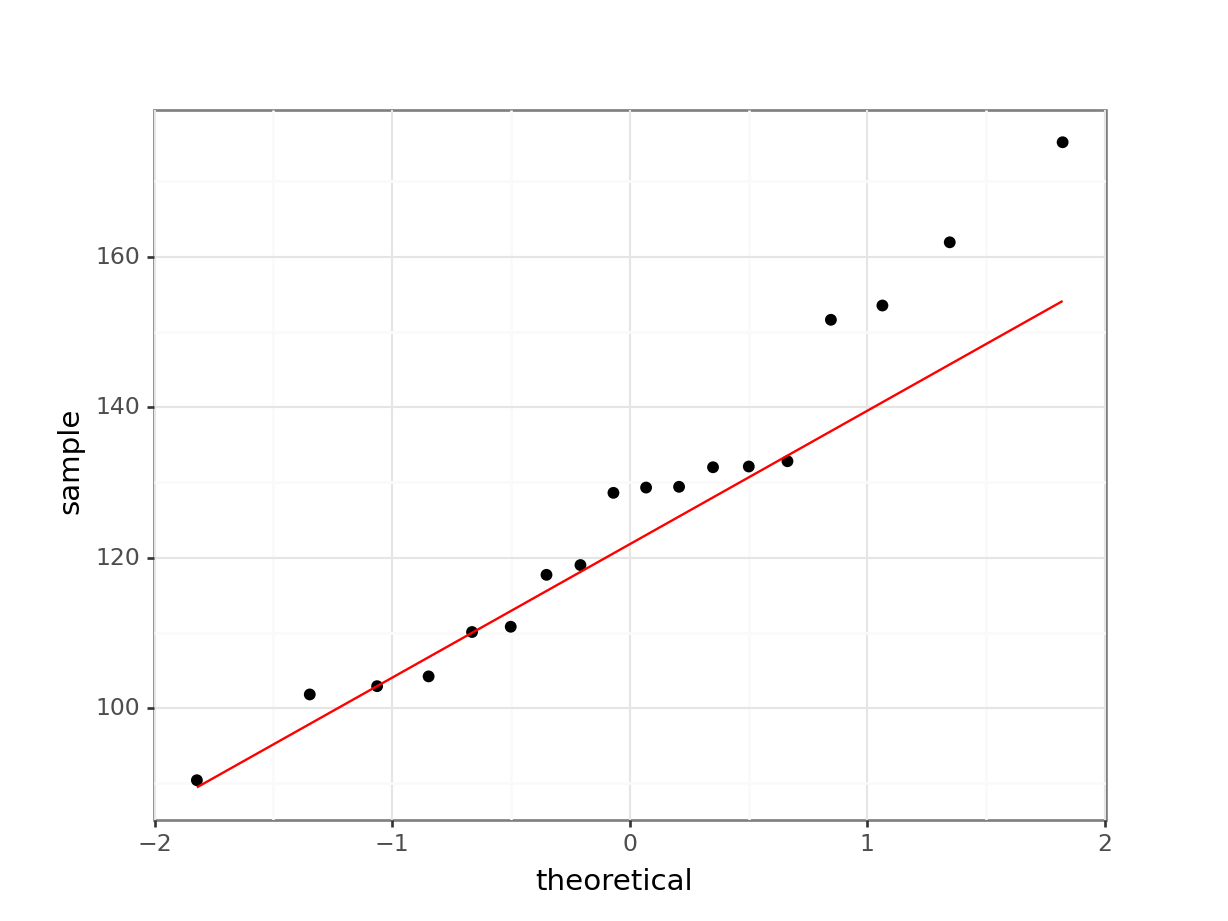

dgplots(lm_oystercatcher_py)

- The top left graph plots the Residuals plot. If the data are best explained by a linear line then there should be a uniform distribution of points above and below the horizontal blue line (and if there are sufficient points then the red line, which is a smoother line, should be on top of the blue line). This plot looks pretty good.

- The top right graph shows the Q-Q plot which allows a visual inspection of normality. If the residuals are normally distributed, then the points should lie on the diagonal blue line. This plot looks good.

- The bottom left Location-Scale graph allows us to investigate whether there is any correlation between the residuals and the predicted values and whether the variance of the residuals changes significantly. If not, then the red line should be horizontal. If there is any correlation or change in variance then the red line will not be horizontal. This plot is fine.

- The last graph shows the Influential points and tests if any one point has an unnecessarily large effect on the fit. Here we’re using the Cook’s distance as a measure. A rule of thumb is that if any value is larger than 1.0, then it might have a large effect on the model. If not, then no point has undue influence. This plot is good. There are different ways to determine the threshold (apart from simply setting it to 1) and in this plot the blue dashed line is at

4/n, withnbeing the number of samples. At this threshold there are some data points that may be influential, but I personally find this threshold rather strict.

We can see that these graphs are very much in line with what we’ve just looked at using the test, which is reassuring. The groups all appear to have the same spread of data, and the Q-Q plot shows that the assumption of normality is alright.

At this stage, I should point out that I nearly always stick with the graphical method for assessing the assumptions of a test. Assumptions are rarely either completely met or not met and there is always some degree of personal assessment.

Whilst the formal statistical tests (like Shapiro-Wilk) are technically fine, they can often create a false sense of things being absolutely right or wrong in spite of the fact that they themselves are still probabilistic statistical tests. In these exercises we are using both approaches whilst you gain confidence and experience in interpreting the graphical output and whilst it is absolutely fine to use both in the future I would strongly recommend that you don’t rely solely on the statistical tests in isolation.

10.6 Implement and interpret the test

As is often the case, performing the actual statistical test is the least of your efforts.

In R we perform the ANOVA on the linear model object, lm_oystercatcher in this case. We do this with the anova() function:

anova(lm_oystercatcher)Analysis of Variance Table

Response: feeding

Df Sum Sq Mean Sq F value Pr(>F)

site 2 1878.02 939.01 150.78 < 2.2e-16 ***

Residuals 117 728.63 6.23

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1This takes the linear model (i.e. finds the means of the three groups and calculates a load of intermediary data that we need for the statistical analysis) and stores this information in an R object (which we’ve called lm_oystercatcher, but which you can call what you like).

In the output:

- The 1st line just tells you the that this is an ANOVA test

- The 2nd line tells you what the response variable is (in this case feeding)

- The 3rd, 4th and 5th lines are an ANOVA table which contain some useful values:

- The

Dfcolumn contains the degrees of freedom values on each row, 2 and 117 (which we can use for the reporting) - The

Fvalue column contains the F statistic, 150.78 (which again we’ll need for reporting). - The p-value is 2.2e-16 and is the number directly under the

Pr(>F)on the 4th line (to be precise, it is 4.13e-33 but anything smaller than 2.2e-16 gets reported as< 2.2e-16). - The other values in the table (in the

Sum SqandMean Sq) columns are used to calculate the F statistic itself and we don’t need to know these.

- The

- The 6th line has some symbolic codes to represent how big (small) the p-value is; so, a p-value smaller than 0.001 would have a *** symbol next to it (which ours does). Whereas if the p-value was between 0.01 and 0.05 then there would simply be a * character next to it, etc. Thankfully we can all cope with actual numbers and don’t need a short-hand code to determine the reporting of our experiments (please tell me that’s true…!)

There are different ways of conducting an ANOVA in Python, with scipy.stats proving an option. However, I find that the anova() function in pingouin provides the easiest and most-detailed option to do this.

It takes the following arguments:

dv: dependent variable (response variable; in our casefeeding)between: between-subject factor (predictor variable; in our casesite)data: which function doesn’t!?detailed: optionalTrueorFalse, we’re setting it toTruebecause we like to know what we’re doing!

pg.anova(dv = "feeding",

between = "site",

data = oystercatcher_py,

detailed = True) Source SS DF MS F p-unc np2

0 site 1878.015371 2 939.007685 150.782449 4.128088e-33 0.720473

1 Within 728.625249 117 6.227566 NaN NaN NaNThis creates a linear model based on the data, i.e. finds the means of the three groups and calculates a load of intermediary data that we need for the statistical analysis.

In the output:

Source: Factor names - in our case these are the different sites (site)SS: Sums of squares (we’ll get to that in a bit)DF: Degrees of freedom (at the moment only used for reporting)MS: Mean squaresF: Our F-statisticp-unc: p-value (uncstands for “uncorrected” - more on multiple testing correction later)np2: Partial eta-square effect sizes (more on this later)

Alternatively, and we’ll be using this method later on in the course, you can perform an ANOVA on the lm_oystercatcher_py object we created earlier.

This uses the sm.stats.anova_lm() function from statsmodels. As you’ll see, the output is very similar:

sm.stats.anova_lm(lm_oystercatcher_py) df sum_sq mean_sq F PR(>F)

C(site) 2.0 1878.015371 939.007685 150.782449 4.128088e-33

Residual 117.0 728.625249 6.227566 NaN NaNAgain, the p-value is what we’re most interested in here and shows us the probability of getting samples such as ours if the null hypothesis were actually true.

Since the p-value is very small (much smaller than the standard significance level of 0.05) we can say “that it is very unlikely that these three samples came from the same parent distribution” and as such we can reject our null hypothesis and state that:

A one-way ANOVA showed that the mean feeding rate of oystercatchers differed significantly between locations (p = 4.13e-33).

10.7 Post-hoc testing (Tukey’s range test)

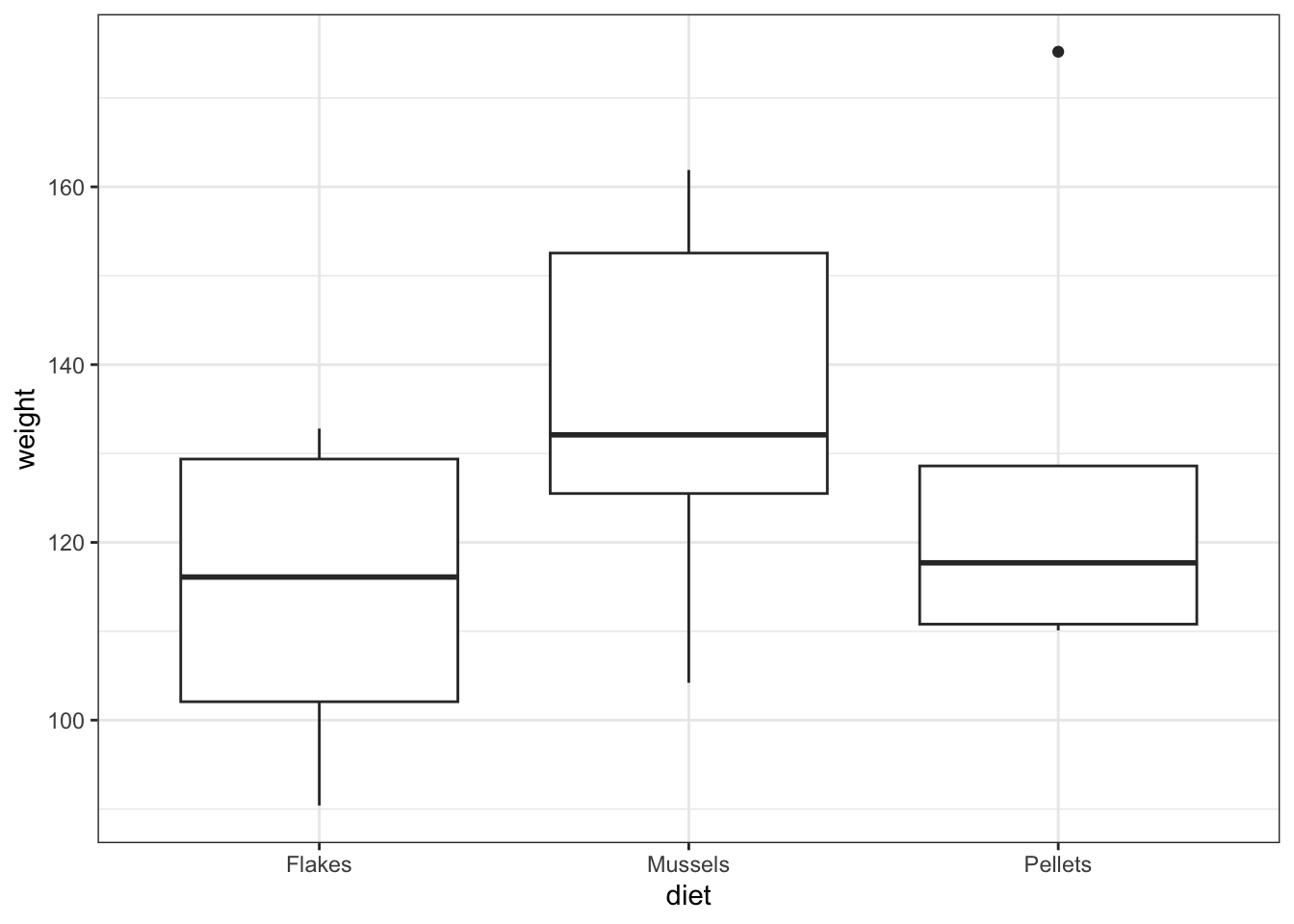

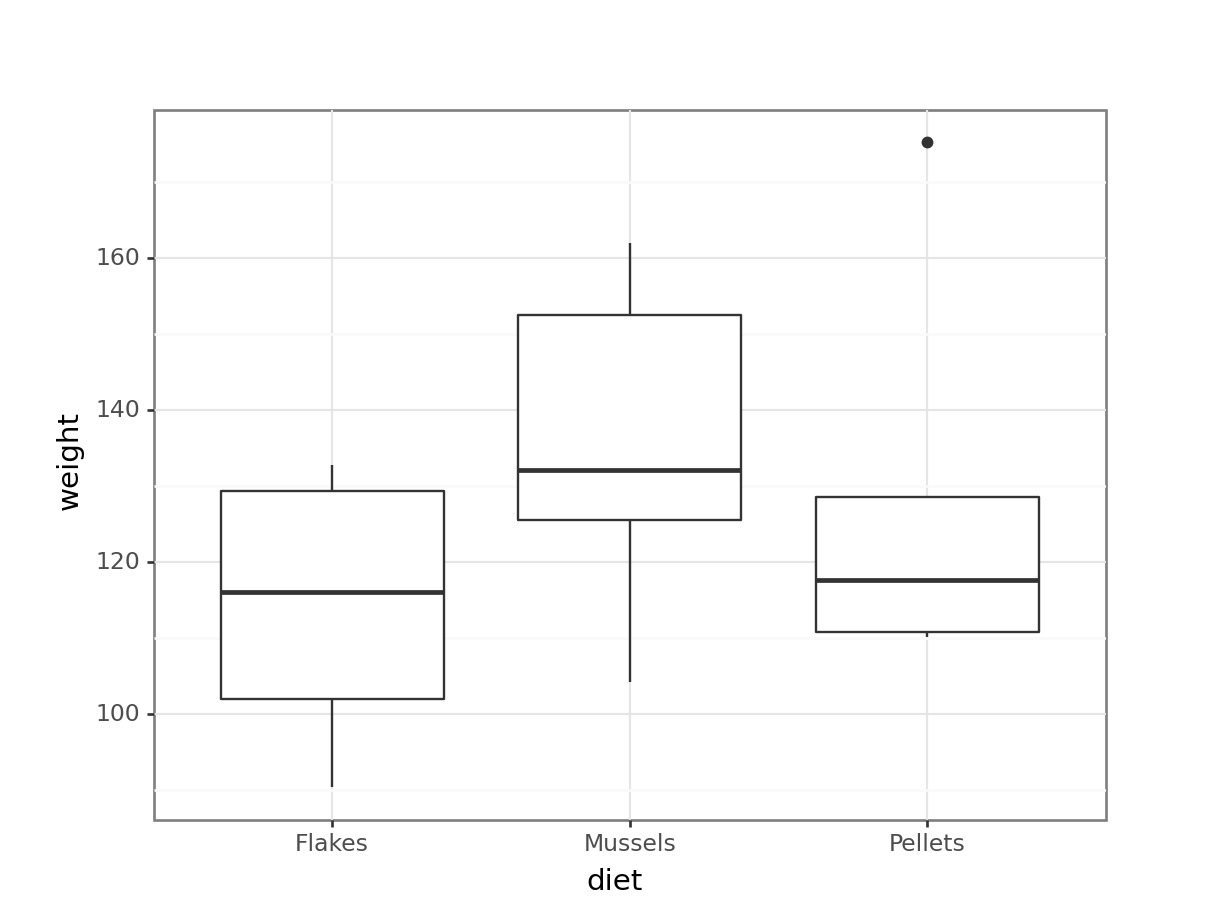

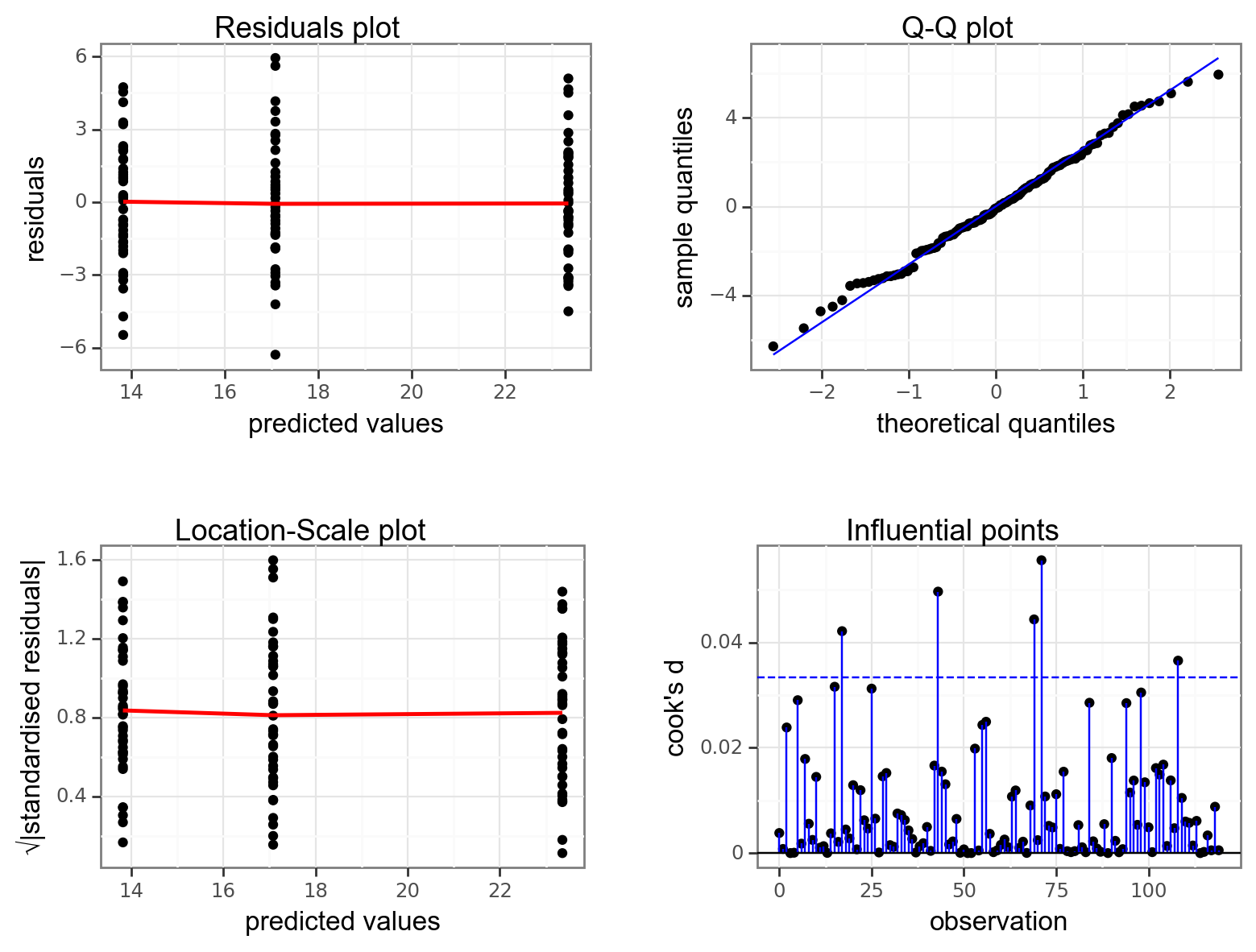

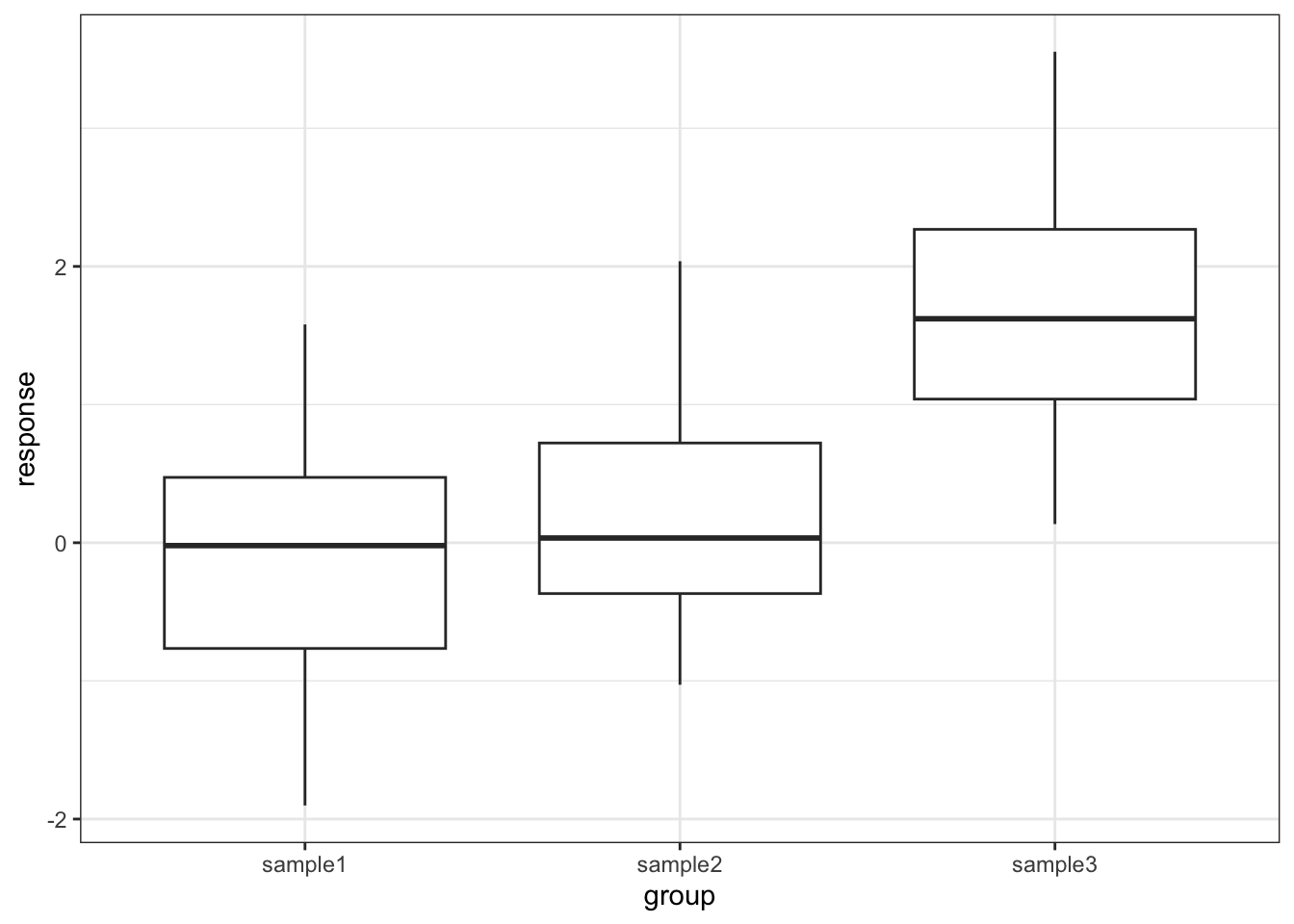

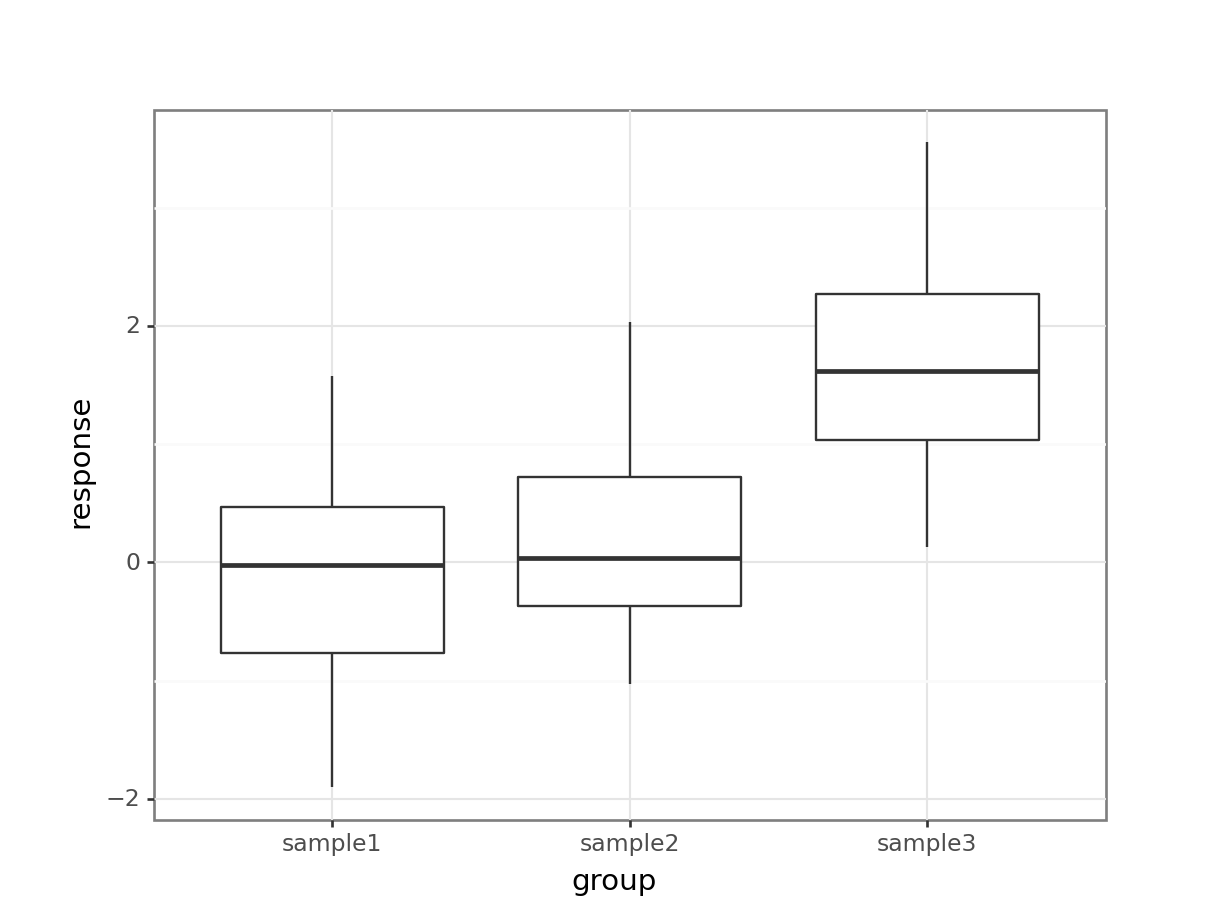

One drawback with using an ANOVA test is that it only tests to see if all of the means are the same. If we get a significant result using ANOVA then all we can say is that not all of the means are the same, rather than anything about how the pairs of groups differ. For example, consider the following box plot for three samples.

Each group is a random sample of 20 points from a normal distribution with variance 1. Groups 1 and 2 come from a parent population with mean 0 whereas group 3 come from a parent population with mean 2. The data clearly satisfy the assumptions of an ANOVA test.

How do we find out if there are any differences between these groups and, if so, which groups are different from each other?

10.7.1 Read in data and plot

# load the data

tukey <- read_csv("data/CS2-tukey.csv")Rows: 60 Columns: 2

── Column specification ────────────────────────────────────────────────────────

Delimiter: ","

chr (1): group

dbl (1): response

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.# have a look at the data

tukey# A tibble: 60 × 2

response group

<dbl> <chr>

1 1.58 sample1

2 0.380 sample1

3 -0.997 sample1

4 -0.771 sample1

5 0.169 sample1

6 -0.698 sample1

7 -0.167 sample1

8 1.38 sample1

9 -0.839 sample1

10 -1.05 sample1

# ℹ 50 more rows# plot the data

ggplot(tukey,

aes(x = group, y = response)) +

geom_boxplot()

# load the data

tukey_py = pd.read_csv("data/CS2-tukey.csv")

# have a look at the data

tukey_py.head() response group

0 1.580048 sample1

1 0.379544 sample1

2 -0.996505 sample1

3 -0.770799 sample1

4 0.169046 sample1# plot the data

(ggplot(tukey_py,

aes(x = "group",

y = "response")) +

geom_boxplot())

10.7.2 Test for a significant difference in group means

# create a linear model

lm_tukey <- lm(response ~ group,

data = tukey)

# perform an ANOVA

anova(lm_tukey)Analysis of Variance Table

Response: response

Df Sum Sq Mean Sq F value Pr(>F)

group 2 33.850 16.9250 20.16 2.392e-07 ***

Residuals 57 47.854 0.8395

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1pg.anova(dv = "response",

between = "group",

data = tukey_py,

detailed = True) Source SS DF MS F p-unc np2

0 group 33.850044 2 16.925022 20.159922 2.391626e-07 0.414302

1 Within 47.853670 57 0.839538 NaN NaN NaNHere we have a p-value of 2.39 \(\times\) 10-7 and so the test has very conclusively rejected the hypothesis that all means are equal.

However, this was not due to all of the sample means being different, but rather just because one of the groups is very different from the others. In order to drill down and investigate this further we use a new test called Tukey’s range test (or Tukey’s honest significant difference test – this always makes me think of some terrible cowboy/western dialogue).

This will compare all of the groups in a pairwise fashion and reports on whether a significant difference exists.

10.7.3 Performing Tukey’s test

To perform Tukey’s range test we can use the tukey_hsd() function from the rstatix package. Note, there is a TukeyHSD() function in base R as well, but the tukey_hsd() function can take a linear model object as input, whereas the TukeyHSD() function cannot. This makes the tukey_hsd() function a bit easier to work with.

# perform Tukey's range test on linear model

tukey_hsd(lm_tukey)# A tibble: 3 × 9

term group1 group2 null.value estimate conf.low conf.high p.adj

* <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

1 group sample1 sample2 0 0.304 -0.393 1.00 0.55

2 group sample1 sample3 0 1.72 1.03 2.42 0.000000522

3 group sample2 sample3 0 1.42 0.722 2.12 0.0000246

# ℹ 1 more variable: p.adj.signif <chr>The tukey_hsd() function takes our linear model (lm_tukey) as its input. The output is a pair-by-pair comparison between the different groups (samples 1 to 3). We are interested in the p.adj column, which gives us the adjusted p-value. The null hypothesis in each case is that there is no difference in the mean between the two groups.

pg.pairwise_tukey(dv = "response",

between = "group",

data = tukey_py).transpose() 0 1 2

A sample1 sample1 sample2

B sample2 sample3 sample3

mean(A) -0.049878 -0.049878 0.253878

mean(B) 0.253878 1.673481 1.673481

diff -0.303756 -1.723359 -1.419603

se 0.289748 0.289748 0.289748

T -1.048347 -5.947789 -4.899442

p-tukey 0.549801 0.000001 0.000025

hedges -0.32493 -1.843488 -1.518558The dv argument is the response variable, whereas the between argument defines the explanatory variable.

We .transpose() the data, so we can look at the output a bit easier. Doing so, we focus on the p-tukey values.

As we can see that there isn’t a significant difference between sample1 and sample2 but there is a significant difference between sample1 and sample3, as well as sample2 and sample3. This matches with what we expected based on the box plot.

10.7.4 Assumptions

When to use Tukey’s range test is a matter of debate (strangely enough a lot of statistical analysis techniques are currently matters of opinion rather than mathematical fact – it does explain a little why this whole field appears so bloody confusing!)

- Some people claim that we should only perform Tukey’s range test (or any other post-hoc tests) if the preceding ANOVA test showed that there was a significant difference between the groups and that if the ANOVA test had not shown any significant differences between groups then we would have to stop there.

- Other people say that this is rubbish and we can do whatever we like as long as we tell people what we did.

The background to this is rather involved but one of the reasons for this debate is to prevent so-called data-dredging or p-hacking. This is where scientists/analysts are so fixated on getting a “significant” result that they perform a huge variety of statistical techniques until they find one that shows that their data is significant (this was a particular problem in psychological studies for while – not to point fingers though, they are working hard to sort their stuff out. Kudos!).

Whether you should use post-hoc testing or not will depend on your experimental design and the questions that you’re attempting to answer.

Tukey’s range test, when we decide to use it, requires the same three assumptions as an ANOVA test:

- Normality of distributions

- Equality of variance between groups

- Independence of observations

10.8 Exercises

10.8.1 Lobster weight

10.10 Summary

- We use an ANOVA to test if there is a difference in means between multiple continuous response variables

- We check assumptions with diagnostic plots and check if the residuals are normally distributed

- We use post-hoc testing to check for significant differences between the group means, for example using Tukey’s range test