# Load required R libraries

library(tidyverse)

library(performance)

# Read in the required data

islands <- read_csv("data/islands.csv")

seatbelts <- read_csv("data/seatbelts.csv")12 Analysing count data

- Be able to identify count data

- Fit a Poisson regression model with count data

- Assess whether assumptions are met and test significance of the model

12.1 Context

We have now covered analysing binary responses and learned how to assess the quality and appropriateness of the resulting models. In the next sections we extend this further, by looking at a different type of response variable: count data. The humble count may look innocent, but often hides a whole lot of nuances that are hard to spot. So, pay attention!

12.2 Section setup

We’ll use the following libraries and data:

# Load required Python libraries

import pandas as pd

import math

import statsmodels.api as sm

import statsmodels.formula.api as smf

from scipy.stats import *

from plotnine import *

# Read in the required data

islands = pd.read_csv("data/islands.csv")

seatbelts = pd.read_csv("data/seatbelts.csv")The worked example in this chapter will use the islands dataset.

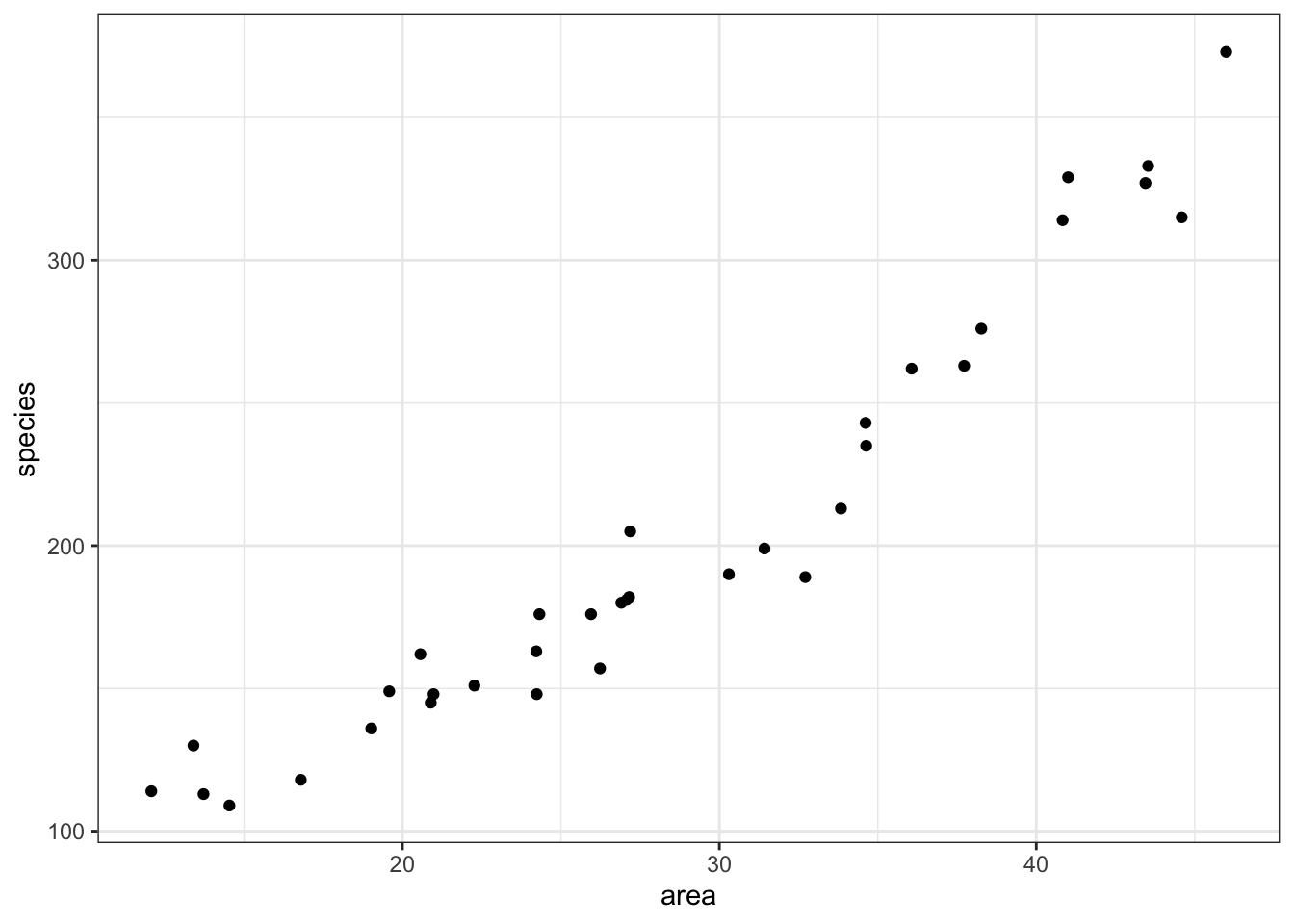

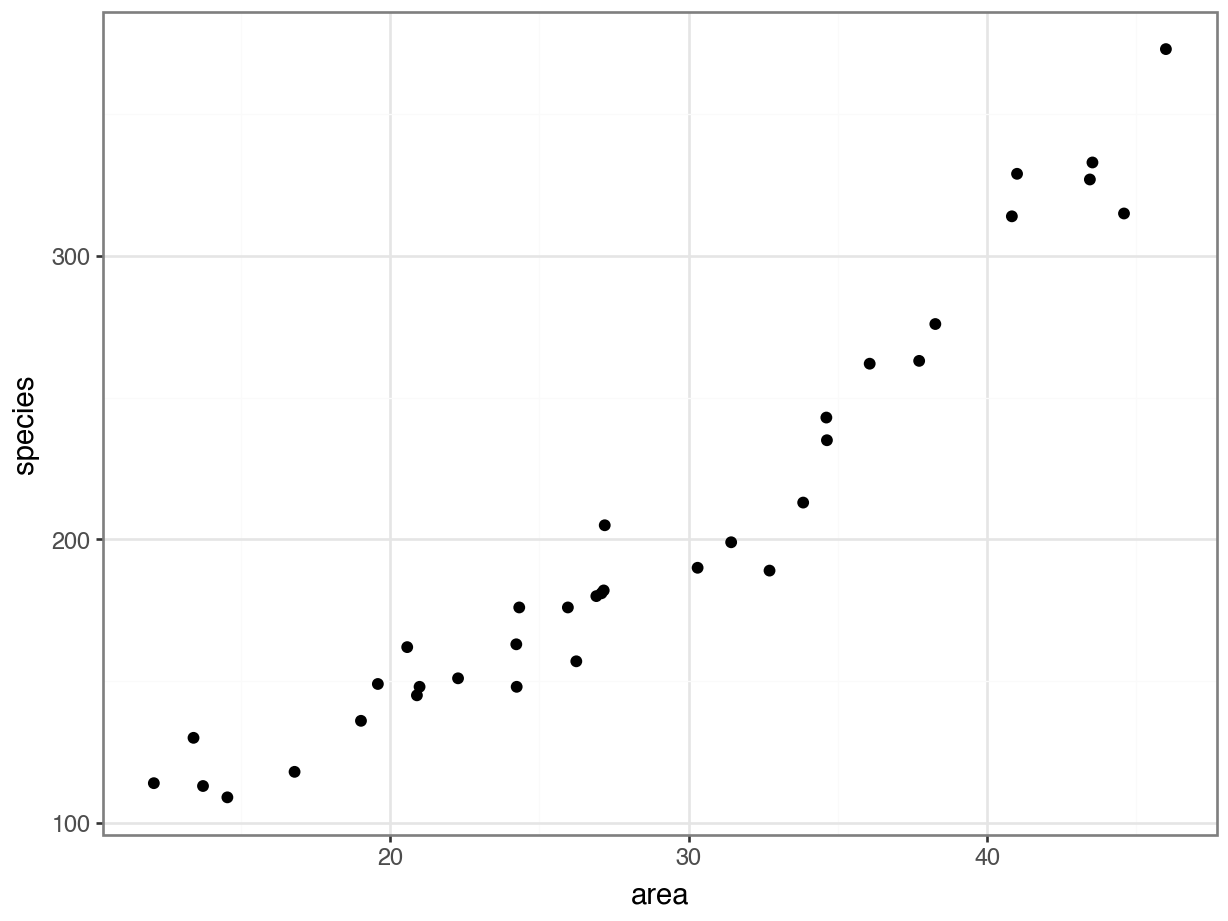

This is a dataset comprising 35 observations of two variables. For each small island in the dataset, the researcher recorded the number of unique species; they want to know if this can be predicted from the area (km2) of the island.

12.3 Load and visualise the data

First we load the data, then we visualise it.

islands <- read_csv("data/islands.csv")

head(islands)# A tibble: 6 × 2

species area

<dbl> <dbl>

1 114 12.1

2 130 13.4

3 113 13.7

4 109 14.5

5 118 16.8

6 136 19.0islands = pd.read_csv("data/islands.csv")

islands.head() species area

0 114 12.076133

1 130 13.405439

2 113 13.723525

3 109 14.540359

4 118 16.792122We can plot the data:

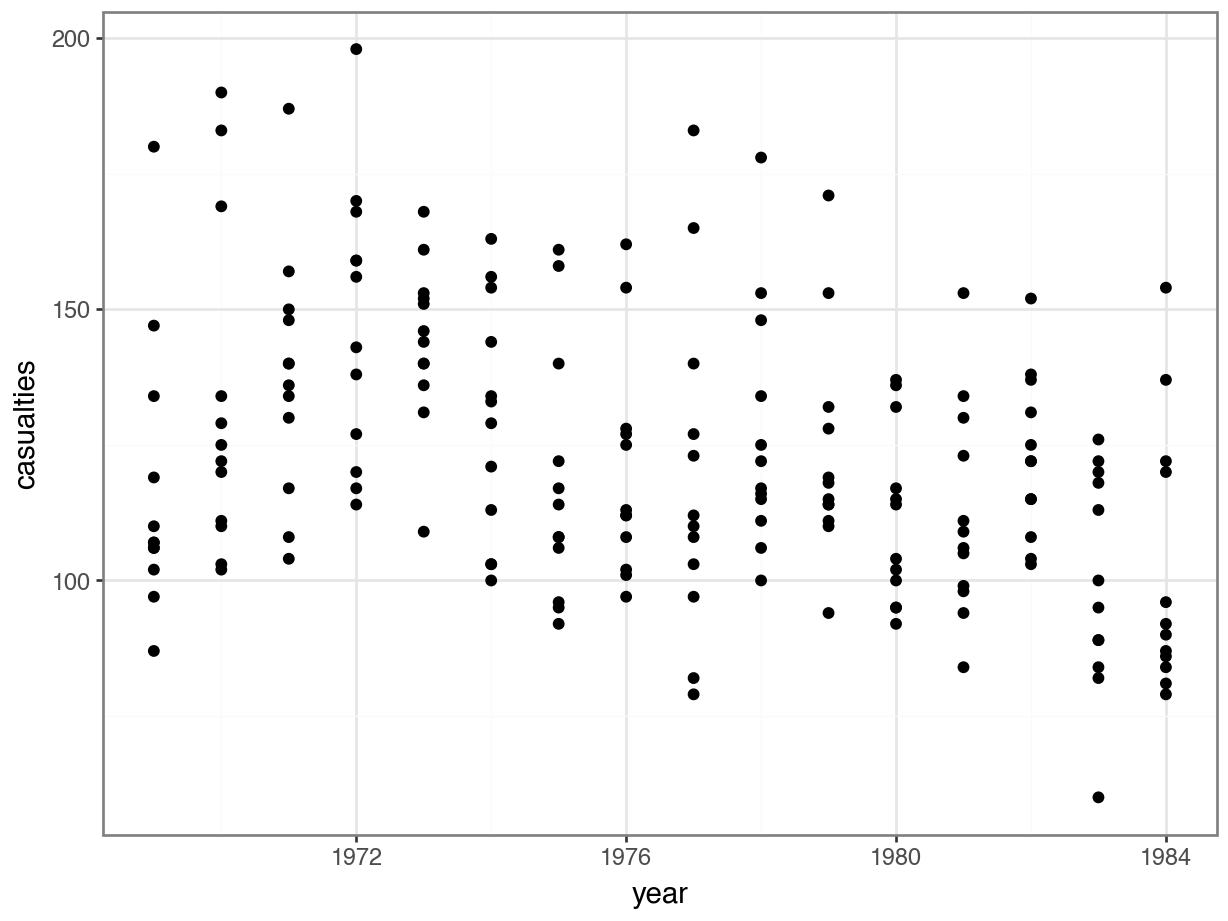

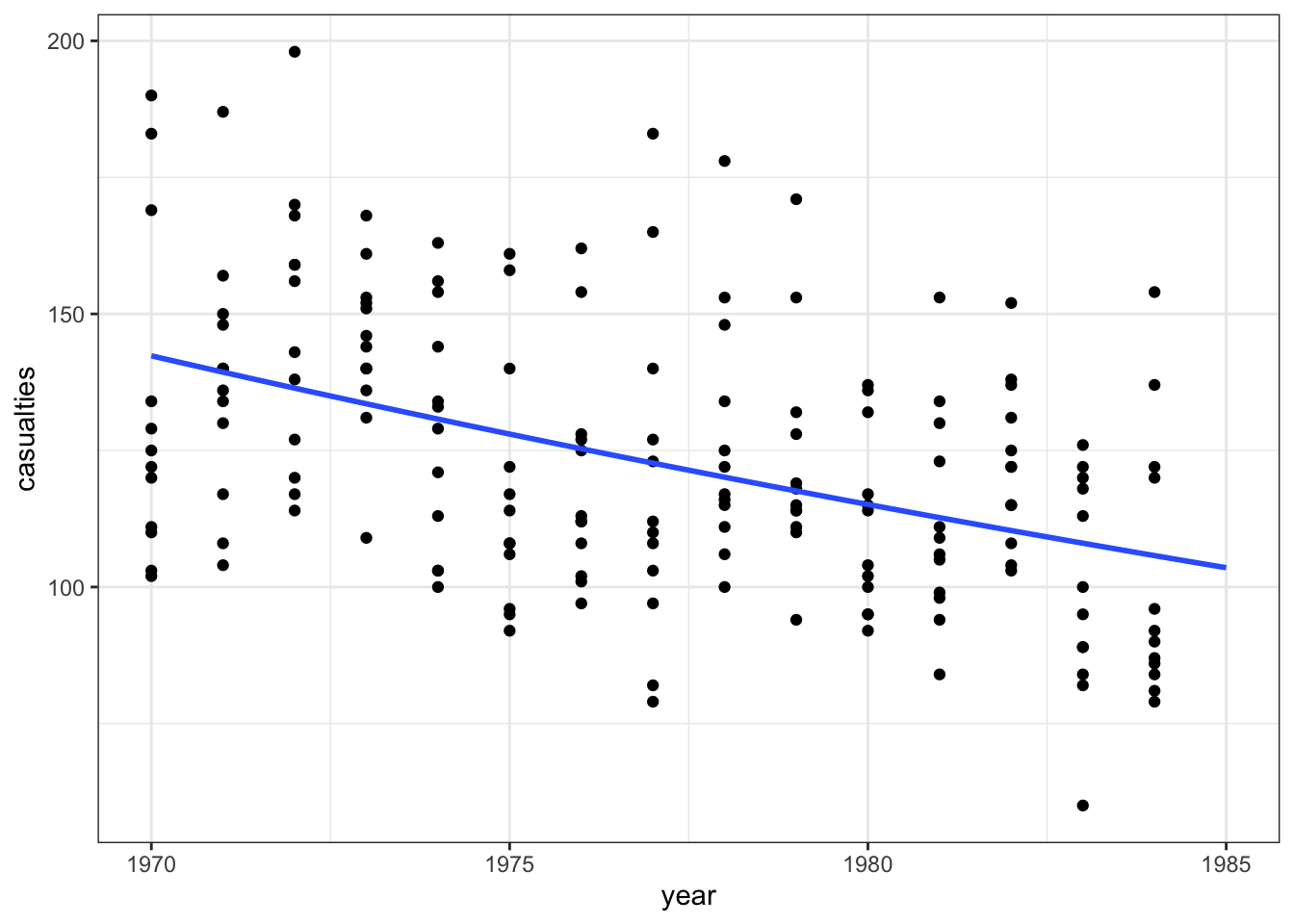

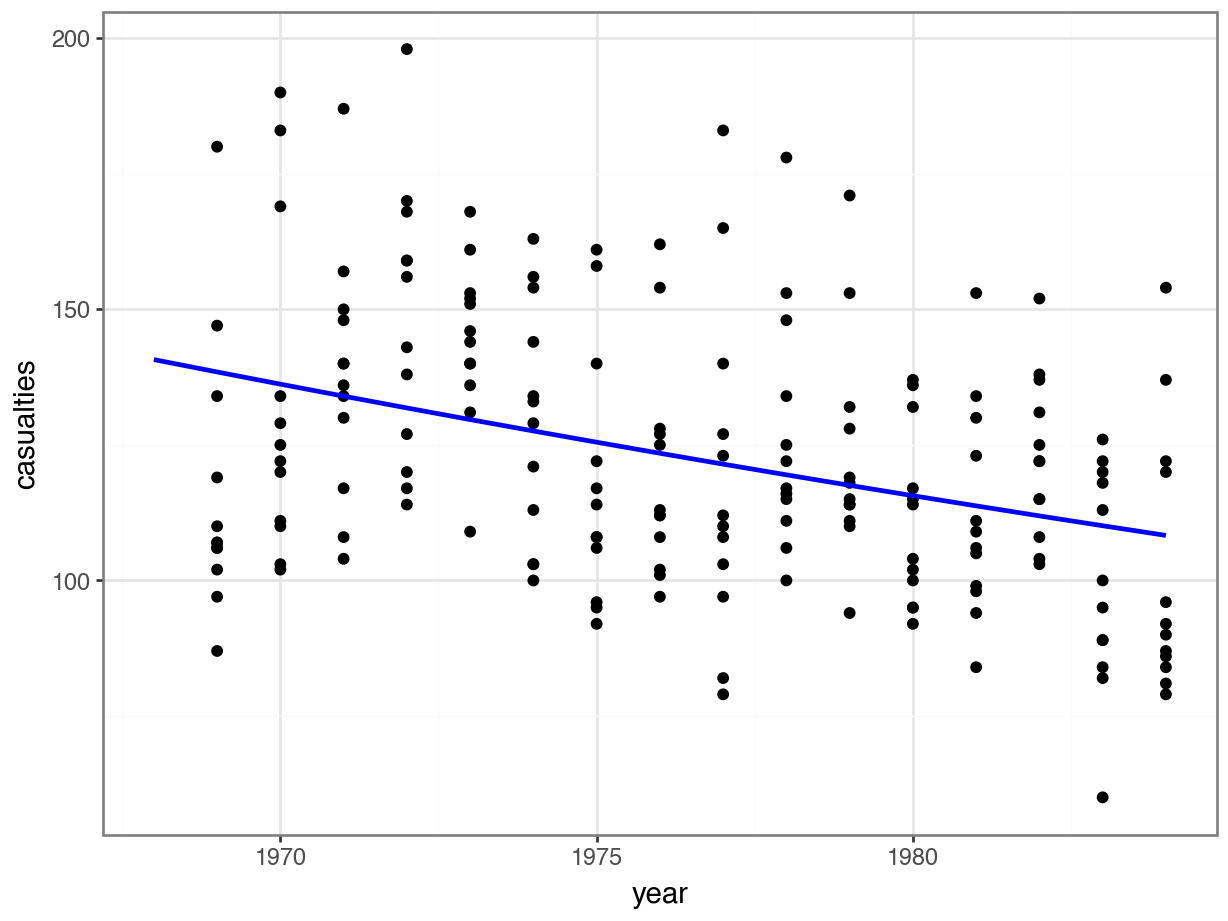

Each dot on the scatterplot represents an island in the dataset.

It looks as though area definitely has some relationship with the number of species that we observe.

Next step is to try to model these data.

12.4 Constructing a model

The species variable is the outcome or response (since we’re interested in whether it’s predicted by area).

It qualifies as a count variable. It’s bounded at 0 and \(\infty\), and can only jump up in integers - in other words, you can’t have less than 0 species, or 6.3 species.

This means the best place to start is a Poisson regression. We fit these in a very similar way to a logistic regression, just with a different option specified for the family argument.

glm_isl <- glm(species ~ area,

data = islands, family = "poisson")and we look at the model summary:

summary(glm_isl)

Call:

glm(formula = species ~ area, family = "poisson", data = islands)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 4.241129 0.041322 102.64 <2e-16 ***

area 0.035613 0.001247 28.55 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for poisson family taken to be 1)

Null deviance: 856.899 on 34 degrees of freedom

Residual deviance: 30.437 on 33 degrees of freedom

AIC: 282.66

Number of Fisher Scoring iterations: 3The output is strikingly similar to the logistic regression models (who’d have guessed, eh?) and the main numbers to extract from the output are the coefficients (the two numbers underneath Estimate in the table above):

coefficients(glm_isl)(Intercept) area

4.24112904 0.03561346 model = smf.glm(formula = "species ~ area",

family = sm.families.Poisson(),

data = islands)

glm_isl = model.fit()Let’s look at the model output:

print(glm_isl.summary()) Generalized Linear Model Regression Results

==============================================================================

Dep. Variable: species No. Observations: 35

Model: GLM Df Residuals: 33

Model Family: Poisson Df Model: 1

Link Function: Log Scale: 1.0000

Method: IRLS Log-Likelihood: -139.33

Date: Fri, 25 Jul 2025 Deviance: 30.437

Time: 08:28:46 Pearson chi2: 30.3

No. Iterations: 4 Pseudo R-squ. (CS): 1.000

Covariance Type: nonrobust

==============================================================================

coef std err z P>|z| [0.025 0.975]

------------------------------------------------------------------------------

Intercept 4.2411 0.041 102.636 0.000 4.160 4.322

area 0.0356 0.001 28.551 0.000 0.033 0.038

==============================================================================The output is strikingly similar to the logistic regression models (who’d have guessed, eh?) and the main numbers to extract from the output are the coefficients (the two numbers underneath coef in the table above):

print(glm_isl.params)Intercept 4.241129

area 0.035613

dtype: float6412.4.1 The model equation

Now that we have the beta coefficients, we can place them inside an equation.

The left-hand side of this equation is the expected number of species, \(E(species)\).

On the right-hand side, we take the linear equation \(\beta_0 + \beta_1 * x_1\) and embed it inside the inverse link function, which in this case is just the exponential function.

It looks like this:

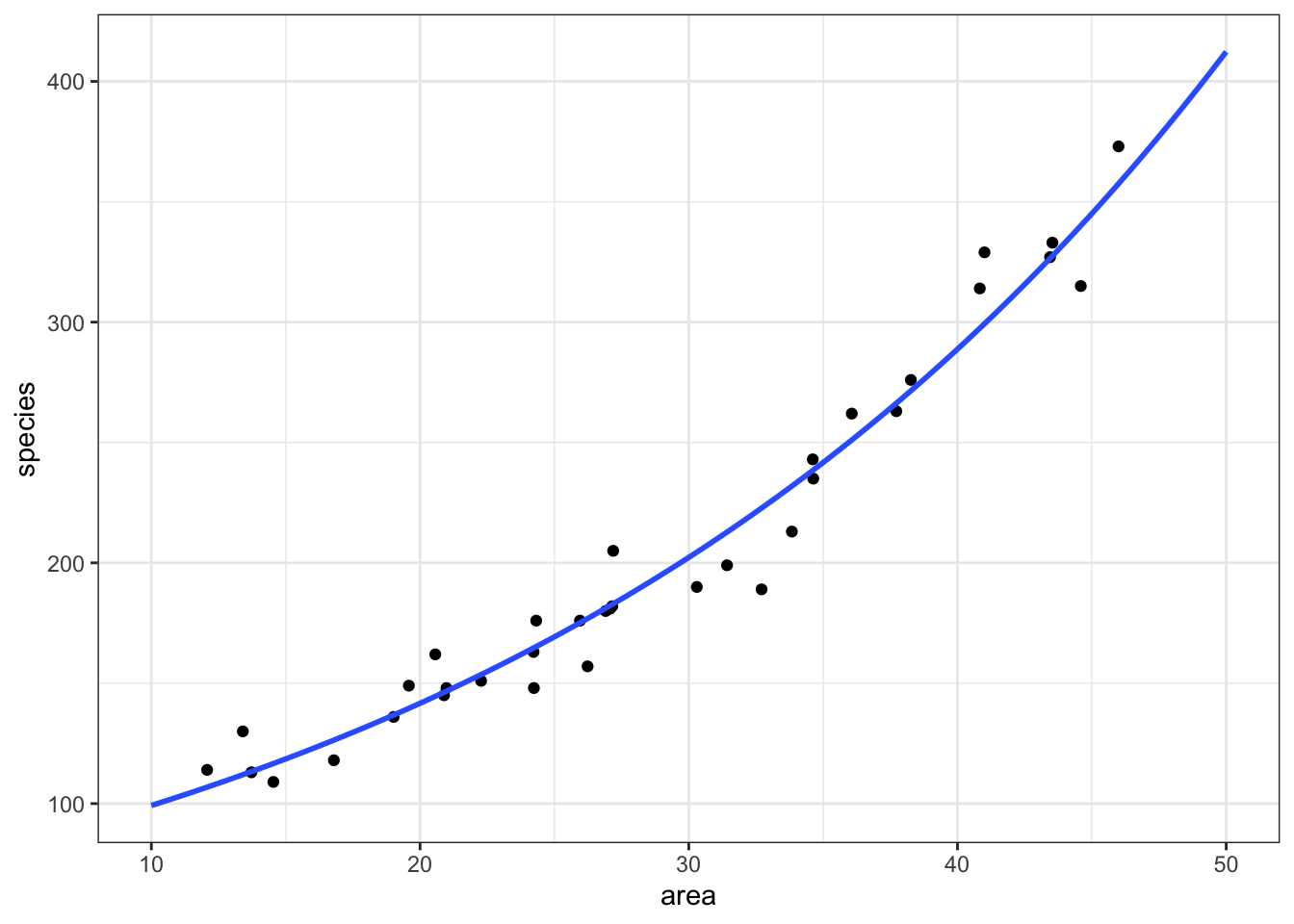

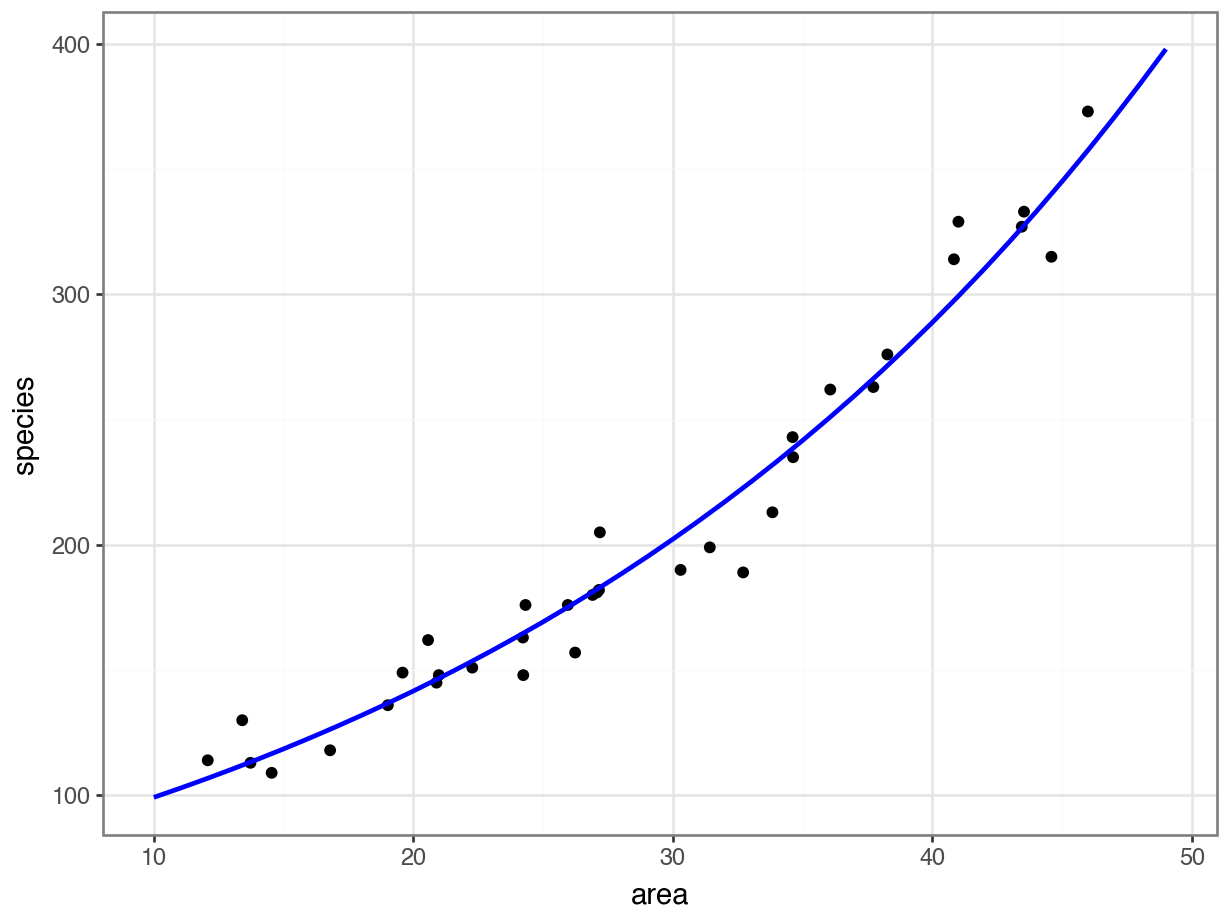

\[ E(species) = \exp(4.24 + 0.036 \times area) \]

Interpreting this requires a bit of thought (not much, but a bit).

The intercept coefficient, 4.24, is related to the number of species we would expect on an island of zero area. But in order to turn this number into something meaningful we have to exponentiate it.

Since \(\exp(4.24) \approx 69\), we can say that the baseline number of species the model expects on any island is 69.

The coefficient of area is the fun bit. For starters we can see that it is a positive number which does mean that increasing area leads to increasing numbers of species. Good so far.

But what does the value 0.036 actually mean? Well, if we exponentiate it as well, we get \(\exp(0.036) \approx 1.04\). This means that for every increase in area of 1 km2 (the original units of the area variable), the number of species on the island is multiplied by 1.04.

So, an island of area km2 will have \(1.04 \times 69 \approx 72\) species.

12.5 Plotting the Poisson regression

First, we produce a set of predictions, which we can then plot.

model = pd.DataFrame({'area': list(range(10, 50))})

model["pred"] = glm_isl.predict(model)

model.head() area pred

0 10 99.212463

1 11 102.809432

2 12 106.536811

3 13 110.399326

4 14 114.40187712.6 Assumptions & model fit

As a reminder from last chapter, we want to consider the following things to assess whether we’ve fit the right model:

- The distribution of the response variable

- The link function

- Independence

- Influential points

(We don’t need to worry about collinearity here - there’s only one predictor!)

We’re also going to talk about dispersion, which really comes into play when talking about count data - we’ll get to that after we’ve run through the stuff that’s familiar.

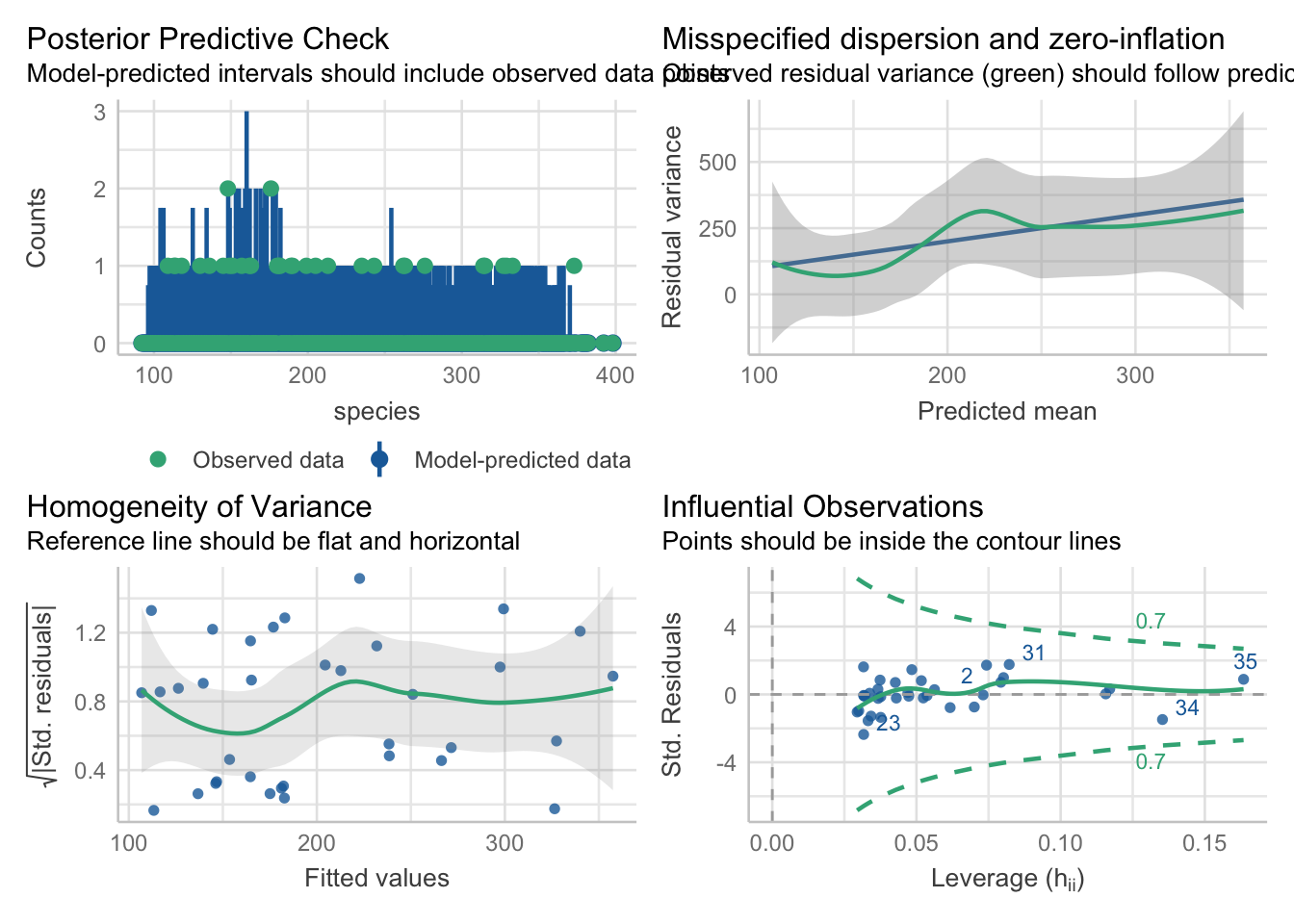

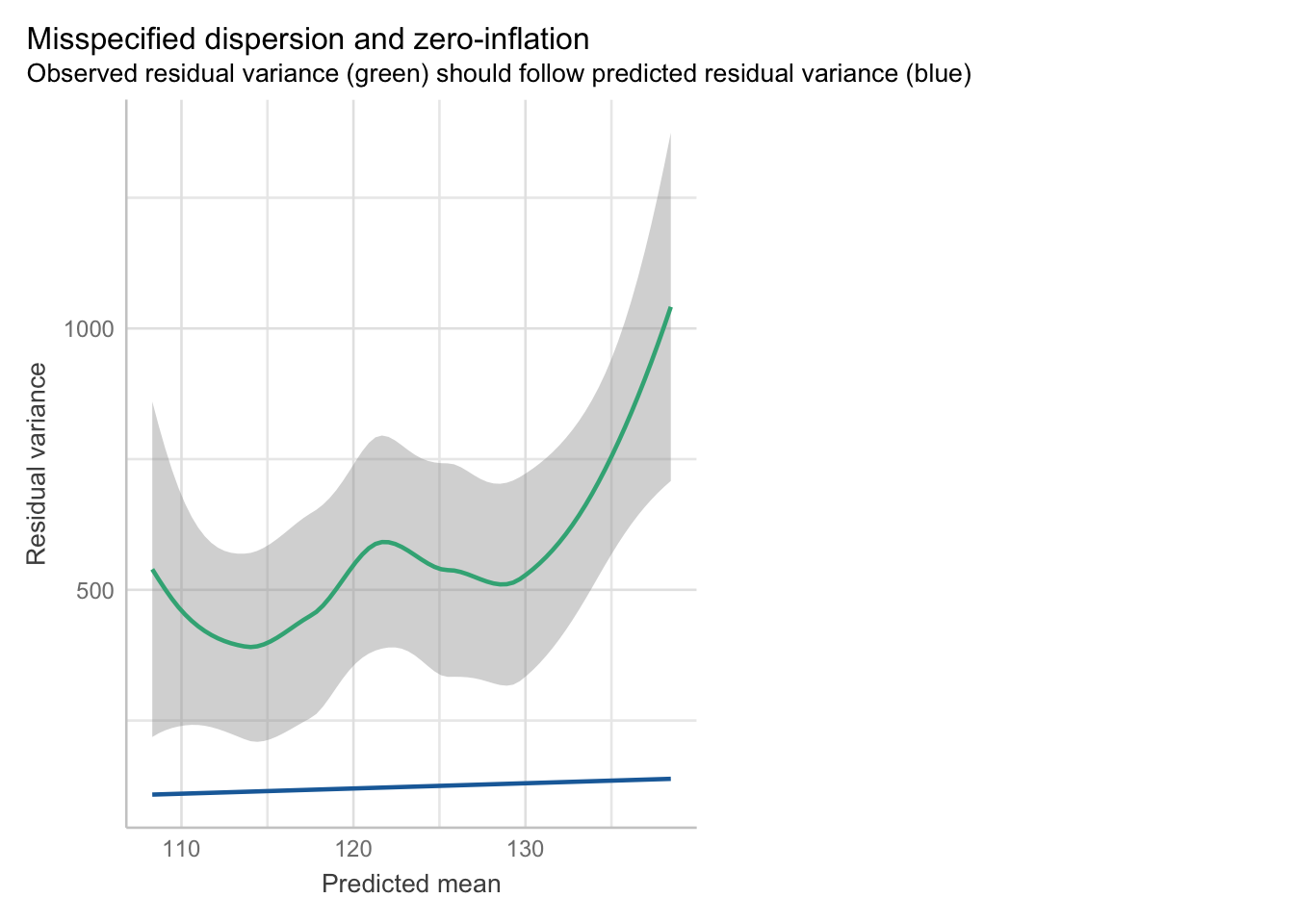

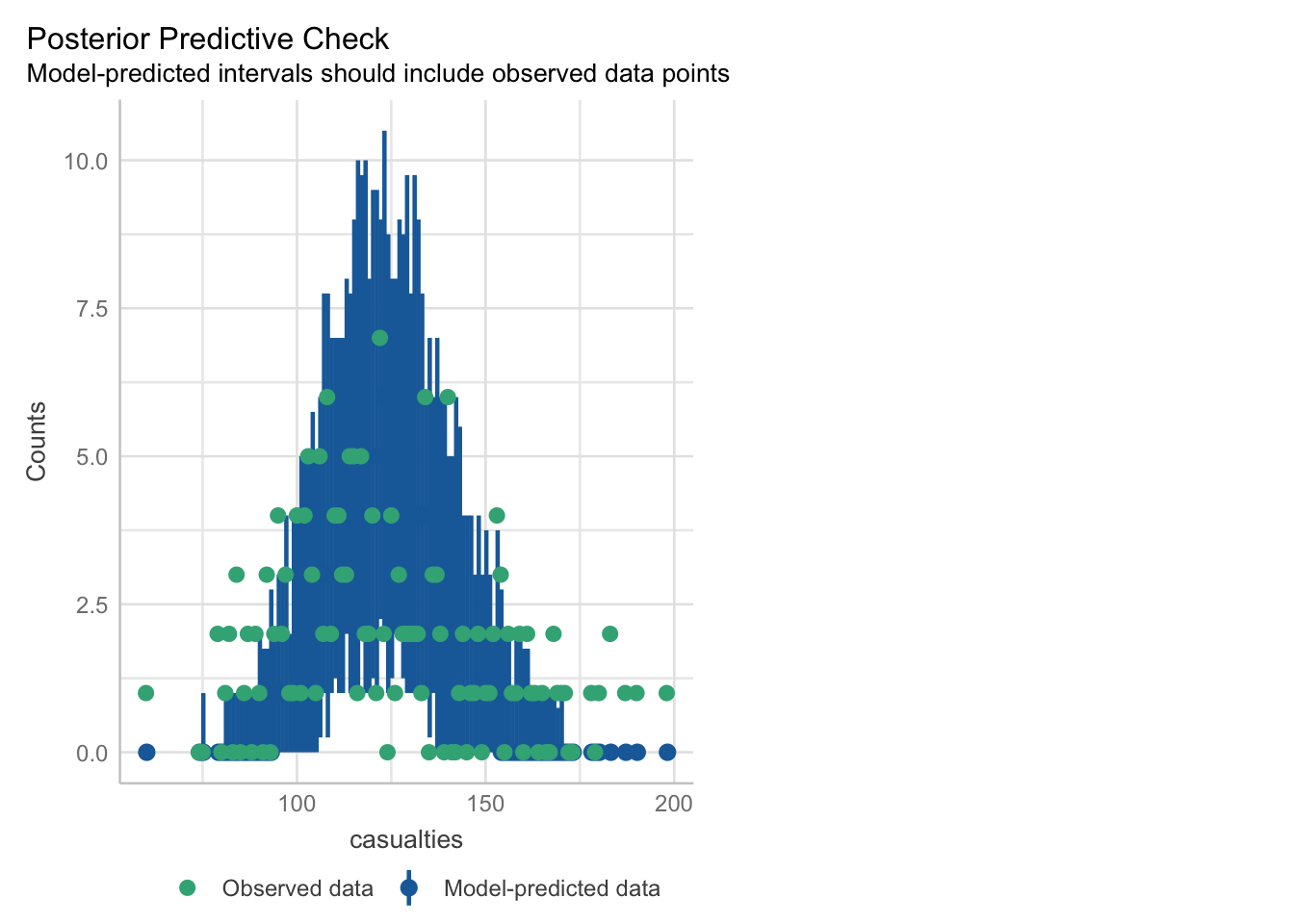

With the generic check_model function, we can visually assess a few things quite quickly.

check_model(glm_isl)Cannot simulate residuals for models of class `glm`. Please try

`check_model(..., residual_type = "normal")` instead.The posterior predictive check looks alright. The blue simulations seem to be following a pretty similar distribution to the actual data in green.

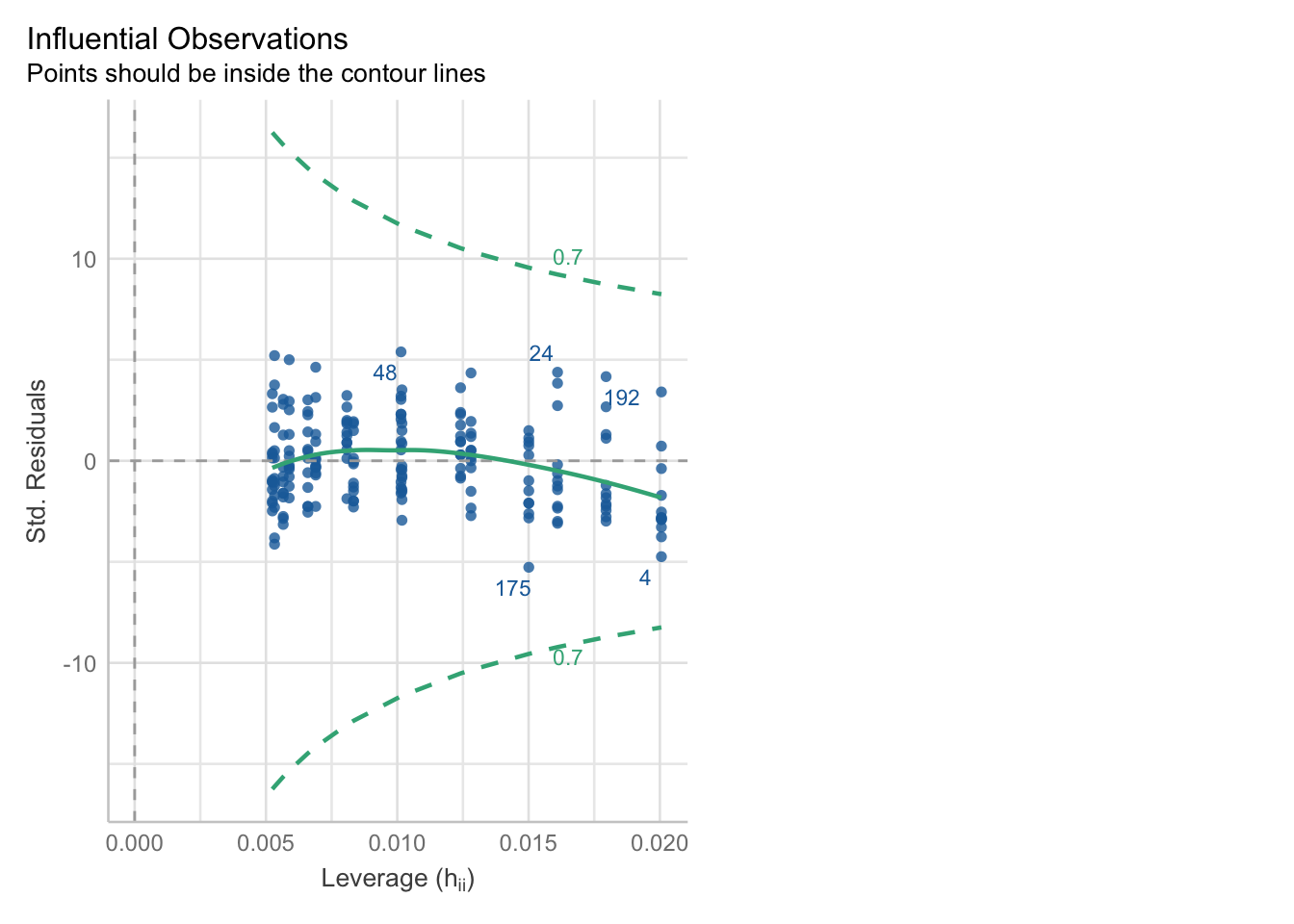

The leverage/Cook’s distance plot also isn’t identifying any data points that we need to be concerned about. We can follow up on that:

check_outliers(glm_isl, threshold = list('cook'=0.5))OK: No outliers detected.

- Based on the following method and threshold: cook (0.5).

- For variable: (Whole model)We can check for influential points:

influence = glm_isl.get_influence()

cooks = influence.cooks_distance[0]

influential_points = np.where(cooks > 0.5)[0]

print("Influential points:", influential_points)Influential points: []Influential points: There’s no evidence of any points with high Cook’s distances, so life is rosy on that front.

Independence: From the description of the dataset, it sounds plausible that each island is independent. Of course, if we later found out that some of the islands were clustered together into a bunch of archipelagos, that would definitely be cause for concern.

Distribution & link function: Again, from the description of the species variable, we can be confident that this really is a count variable. Whether or not Poisson regression with the log link function is correct, however, will depend on what happens when we look at the dispersion parameter.

12.7 Dispersion

12.7.1 What is dispersion?

Dispersion, in statistics, is a general term to describe the variability, scatter, or spread of a distribution. Variance is actually a type of dispersion.

In a normal distribution, the mean (average/central tendency) and the variance (dispersion) are independent of each other; we need both numbers, or parameters, to understand the shape of the distribution.

Other distributions, however, require different parameters to describe them in full. For a Poisson distribution - which we’ll learn more about when we talk about count data - we need just one parameter (\(\lambda\)) to describe the distribution, because the mean and variance are assumed to be the same.

In the context of a model, you can think about the dispersion as the degree to which the data are spread out around the model curve. A dispersion parameter of 1 means the data are spread out exactly as we expect; <1 is called underdispersion; and >1 is called overdispersion.

12.7.3 Assessing dispersion

The easiest way to check dispersion in a model is to calculate the ratio of the residual deviance to the residual degrees of freedom.

If we take a look at the model output, we can see the two quantities we care about - residual deviance and residual degrees of freedom:

summary(glm_isl)

Call:

glm(formula = species ~ area, family = "poisson", data = islands)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 4.241129 0.041322 102.64 <2e-16 ***

area 0.035613 0.001247 28.55 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for poisson family taken to be 1)

Null deviance: 856.899 on 34 degrees of freedom

Residual deviance: 30.437 on 33 degrees of freedom

AIC: 282.66

Number of Fisher Scoring iterations: 3print(glm_isl.summary()) Generalized Linear Model Regression Results

==============================================================================

Dep. Variable: species No. Observations: 35

Model: GLM Df Residuals: 33

Model Family: Poisson Df Model: 1

Link Function: Log Scale: 1.0000

Method: IRLS Log-Likelihood: -139.33

Date: Fri, 25 Jul 2025 Deviance: 30.437

Time: 08:28:47 Pearson chi2: 30.3

No. Iterations: 4 Pseudo R-squ. (CS): 1.000

Covariance Type: nonrobust

==============================================================================

coef std err z P>|z| [0.025 0.975]

------------------------------------------------------------------------------

Intercept 4.2411 0.041 102.636 0.000 4.160 4.322

area 0.0356 0.001 28.551 0.000 0.033 0.038

==============================================================================The residual deviance is 30.44, on 33 residual degrees of freedom. All we need to do is divide one by the other to get our dispersion parameter.

glm_isl$deviance/glm_isl$df.residual[1] 0.922334print(glm_isl.deviance/glm_isl.df_resid)0.9223340414458482The dispersion parameter here is 0.9223. That’s pretty good - not far off 1 at all.

But how can we check whether it is significantly different from 1?

Once again, the performance package comes in very helpful here.

The check_overdispersion function will give us both the dispersion parameter and a p-value (which is based on a chi-square test - like the goodness-of-fit ones you were running last chapter).

check_overdispersion(glm_isl)# Overdispersion test

dispersion ratio = 0.919

Pearson's Chi-Squared = 30.339

p-value = 0.6No overdispersion detected.Here, it confirms our suspicions; the dispersion parameter is both descriptively close to 1, and also not significantly different from 1.

You’ll notice that this function does give a slightly different value for the dispersion parameter, compared to when we calculated it manually above.

This is because it actually uses the sum of squared Pearson residuals instead of the residual deviance (a distinction which really isn’t worth worrying about). Broadly, the conclusion should be the same, so the difference doesn’t matter very much.

Well, you’ve actually already got the knowledge you need to do this, from the previous chapter on significance testing.

Specifically, the chi-squared goodness-of-fit test can be used to check whether the dispersion is within sensible limits.

pvalue = chi2.sf(glm_isl.deviance, glm_isl.df_resid)

print(pvalue)0.5953470127463268If our chi-squared goodness-of-fit test returns a large (insignificant) p-value, as it does here, that tells us that we don’t need to worry about the dispersion.

If our chi-squared goodness-of-fit test returned a small, significant p-value, this would tell us our model doesn’t fit the data well. And, since dispersion is all about the spread of points around the model, it makes sense that these two things are so closely related!

Great - we can proceed with the Poisson regression, since we don’t seem to have overdispersed or underdispersed data.

Next chapter we’ll look at what we would have done next, if we had run into problems with dispersion.

12.8 Assessing significance

By testing the dispersion with a chi-square test, we have already essentially checked the goodness-of-fit of this model - it’s good.

This leaves us with two things to check:

- Is the overall model better than the null model?

- Are any of the individual predictors significant?

12.8.1 Comparing against the null

We need to fit the null model, and then we can extract the null deviance and degrees of freedom to compare against our model.

glm_isl_null <- glm(species ~ 1,

data = islands, family = "poisson")

anova(glm_isl, glm_isl_null)Analysis of Deviance Table

Model 1: species ~ area

Model 2: species ~ 1

Resid. Df Resid. Dev Df Deviance Pr(>Chi)

1 33 30.44

2 34 856.90 -1 -826.46 < 2.2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Since there’s only one predictor, we actually could achieve the same effect here by just computing an analysis of deviance table:

anova(glm_isl, test = "Chisq")Analysis of Deviance Table

Model: poisson, link: log

Response: species

Terms added sequentially (first to last)

Df Deviance Resid. Df Resid. Dev Pr(>Chi)

NULL 34 856.90

area 1 826.46 33 30.44 < 2.2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1First, we fit a null model:

model = smf.glm(formula = "species ~ 1",

family = sm.families.Poisson(),

data = islands)

glm_isl_null = model.fit()

glm_isl_null.df_residnp.int64(34)And now we can compare the two:

# Calculate the likelihood ratio (i.e. the chi-square value)

lrstat = -2*(glm_isl_null.llf - glm_isl.llf)

# Calculate the associated p-value

pvalue = chi2.sf(lrstat, glm_isl_null.df_resid - glm_isl.df_resid)

print(lrstat, pvalue)826.4623291533155 9.523357725017566e-182This gives a reported p-value extremely close to zero, which is rather small.

Therefore, this model is significant over the null, and species does appear to be predicted by area.

12.9 Exercises

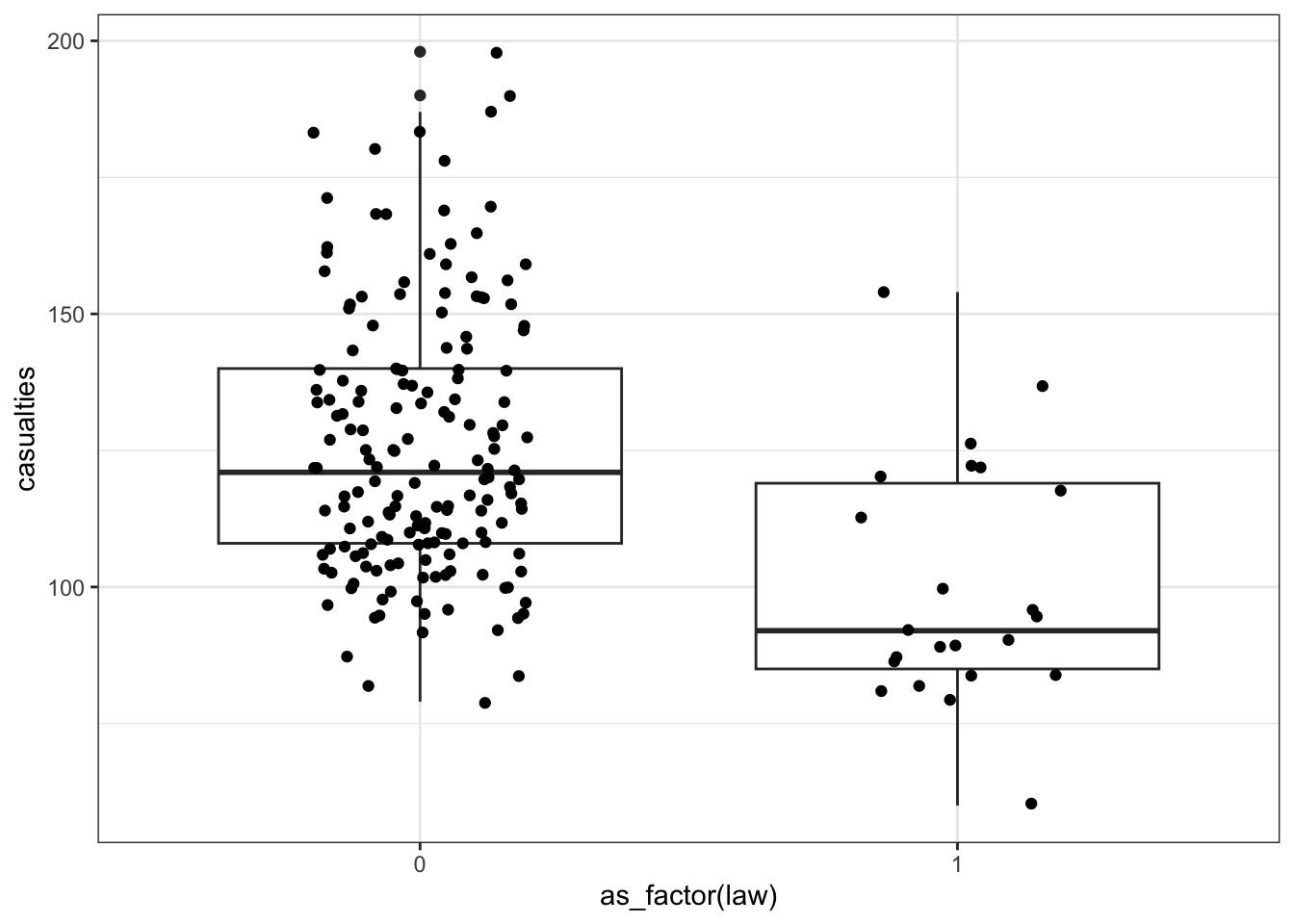

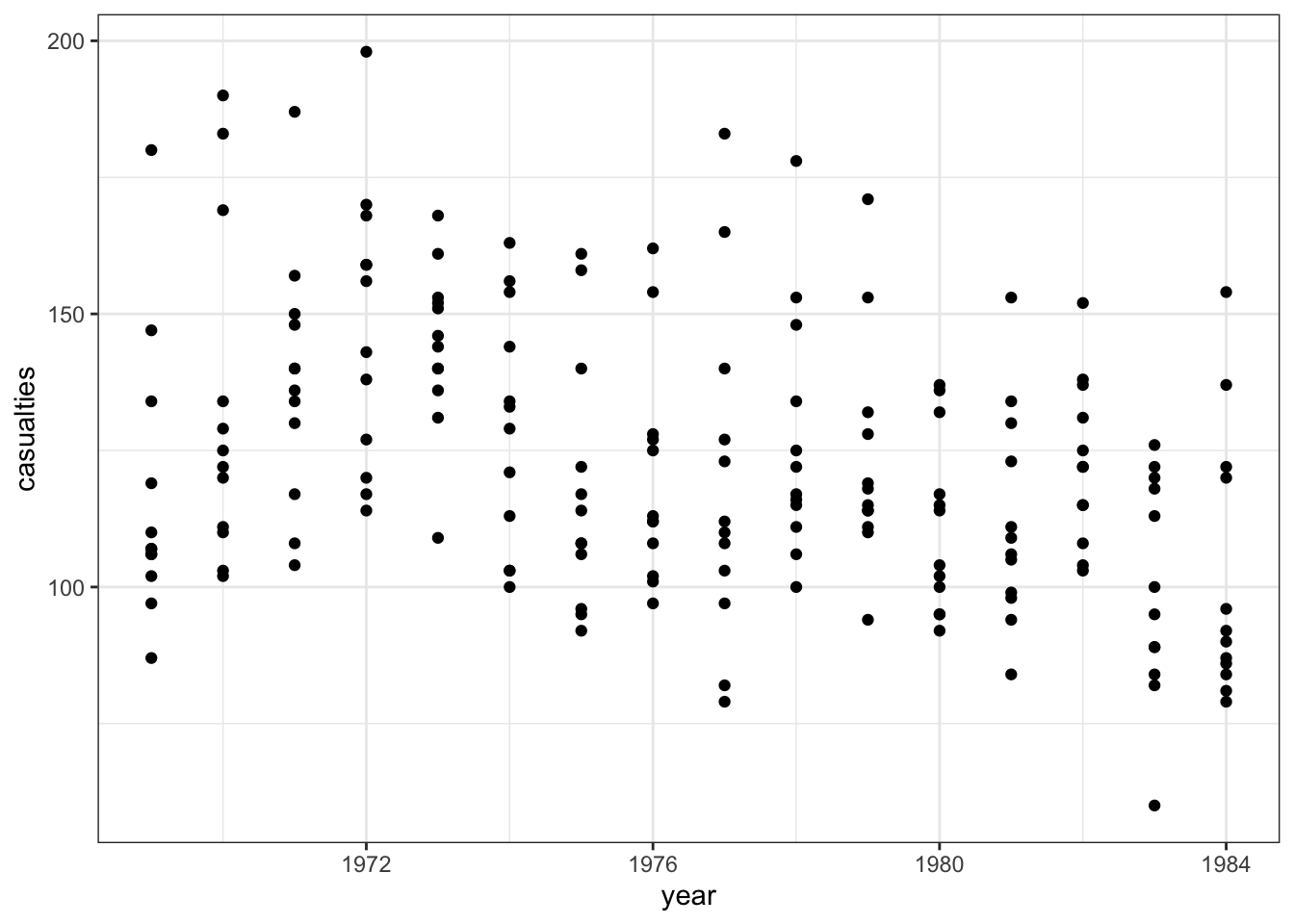

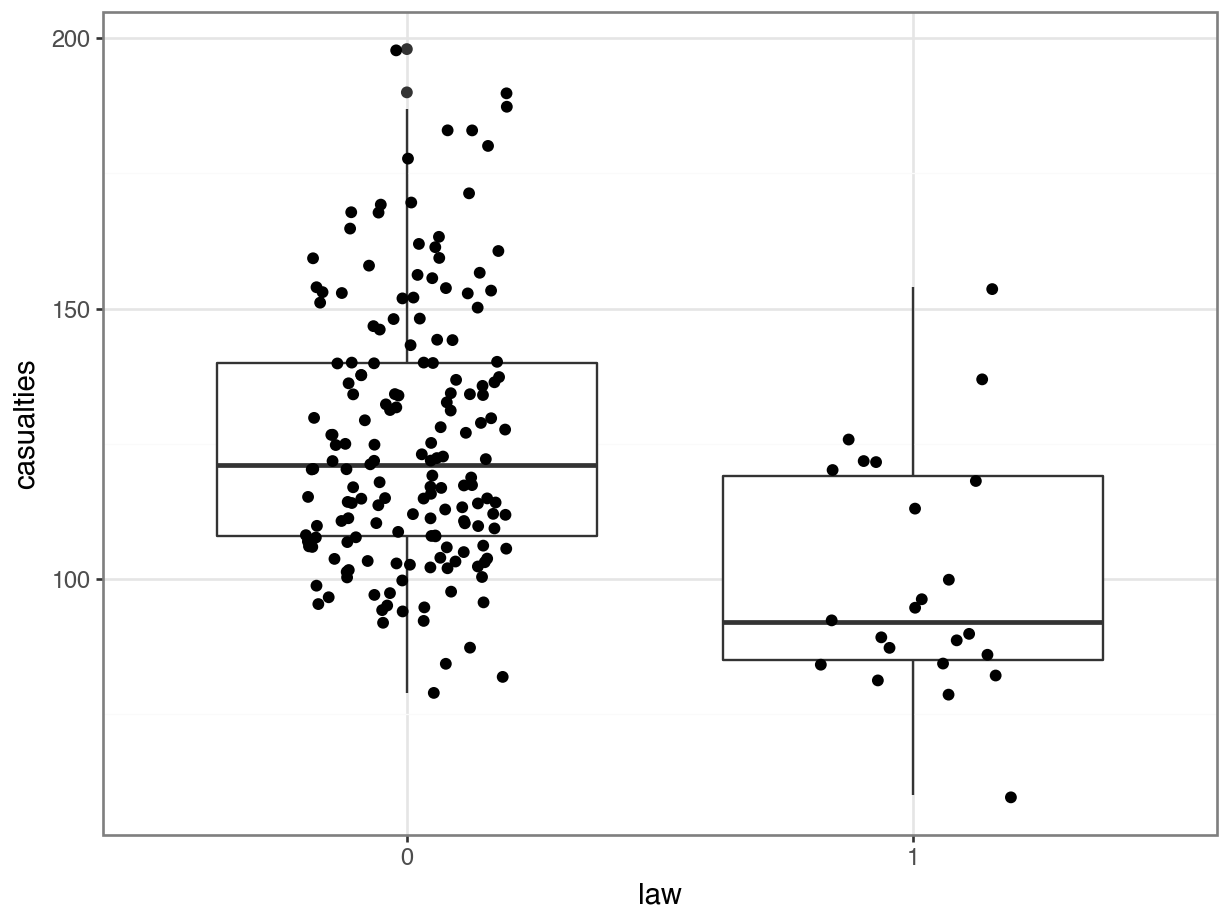

12.9.1 Seat belts

12.10 Summary

- Count data are bounded between 0 and \(\infty\), with integer increases

- This type of data can be modelled with a Poisson regression, using a log link function

- Poisson regression makes all of the same assumptions as logistic regression, plus an assumption about dispersion