    health <- read_csv("data/health.csv")

8 Nested random effects

Mixed effects models are also sometimes referred to as “hierarchical” or “multi-level” models. So far in these materials, we’ve only fitted two-level models, containing a single clustering variable or effect. Sometimes, however, there are random effects nested inside others.

8.1 What is a nested random effect?

Once we are at the stage of having multiple variables in our sample that create clusters or groups, it becomes relevant to consider the relationship that those clustering variables have to one another, to ensure that we’re fitting a model that properly represents our experimental design.

We describe factor B as being nested inside factor A if each group/category of B occurs within only one group/category of factor A.

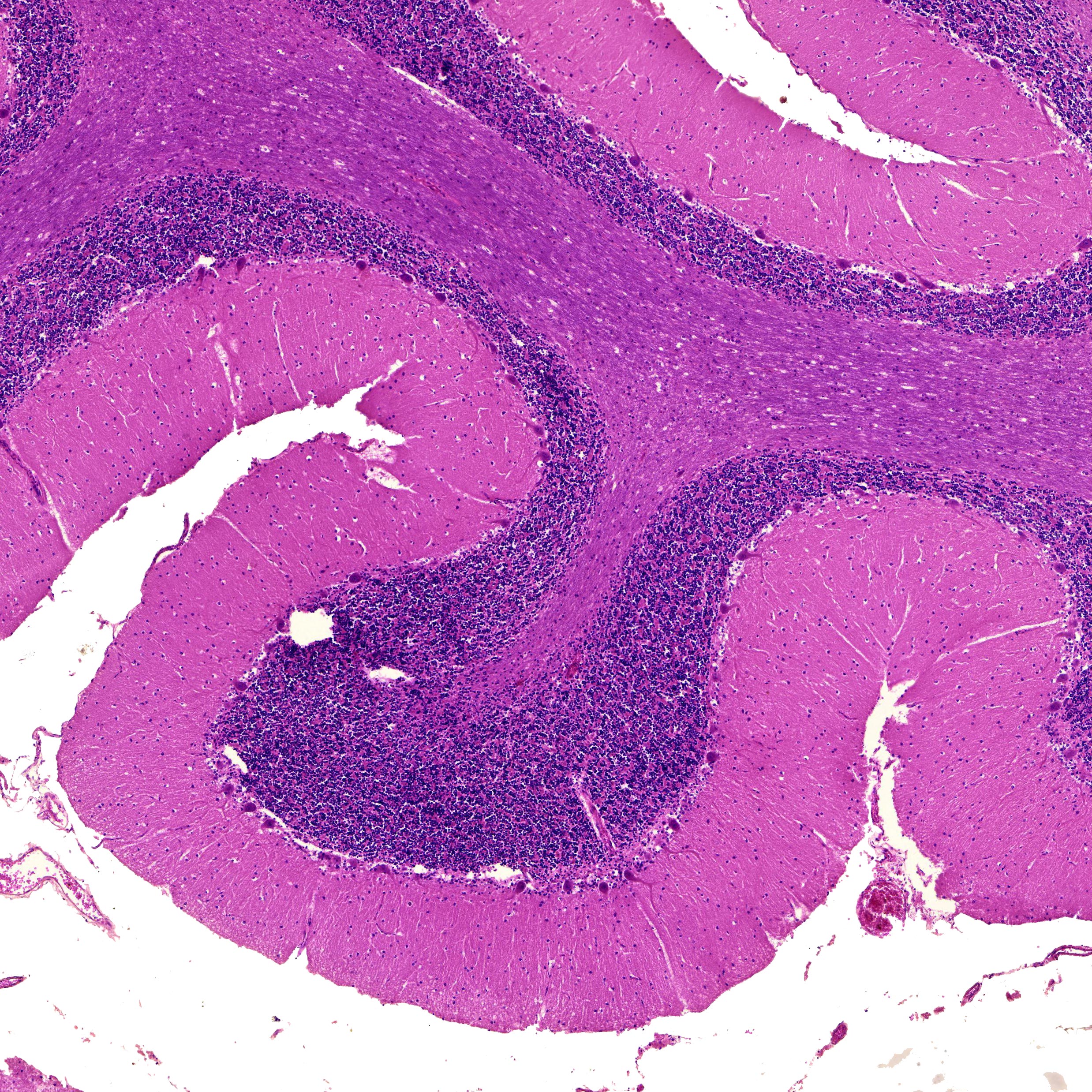

For instance, data on academic performance may be structured as children grouped within classrooms, with classrooms grouped within schools. A histology experiment might measure individual cells grouped within slices, with slices grouped within larger samples. Air pollution data might be measured at observation stations grouped within a particular city, with multiple cities per country.
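The definition of nesting can also be checked mechanically. The following is a minimal sketch in base R, not part of the original materials: the helper name is_nested() and the toy vectors are hypothetical, made up purely for illustration. For every label of the inner factor, it counts how many distinct labels of the outer factor that label appears with.

```r
# Hypothetical helper: TRUE if every label of `inner` occurs within
# exactly one label of `outer`, i.e. `inner` looks nested inside `outer`
# as far as the labels in the data can tell.
is_nested <- function(inner, outer) {
  n_outer <- tapply(as.character(outer), as.character(inner),
                    function(x) length(unique(x)))
  all(n_outer == 1)
}

# Toy data (made up): four classrooms, each belonging to one school
classroom <- c("c1", "c1", "c2", "c2", "c3", "c3", "c4", "c4")
school    <- c("s1", "s1", "s1", "s1", "s2", "s2", "s2", "s2")

nested_ok  <- is_nested(classroom, school)  # classrooms sit inside schools
nested_rev <- is_nested(school, classroom)  # schools do not sit inside classrooms
print(c(nested_ok = nested_ok, nested_rev = nested_rev))
```

A check like this relies on the labels being unique. If the same labels are reused across groups, a nested factor can look crossed, which is the problem that the section on implicit vs explicit nesting deals with.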

8.2 Fitting a three-level model

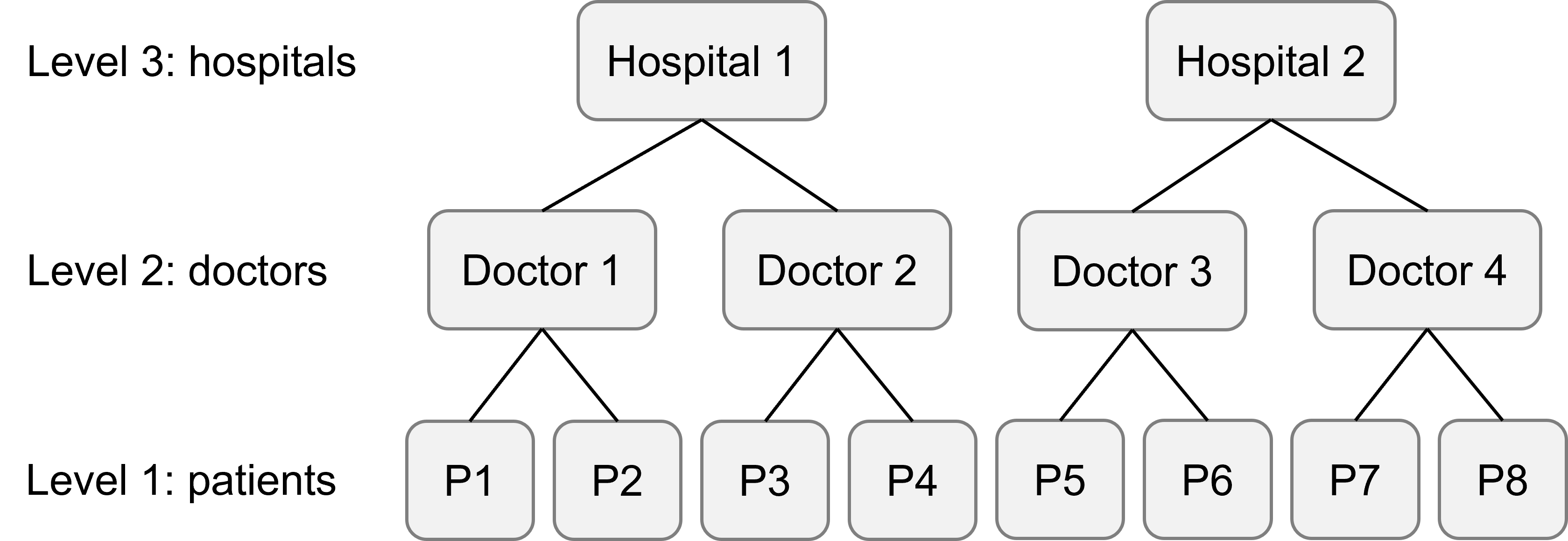

Another classic example of nested random effects that would prompt a three-level model can be found in a clinical setting: within each hospital, there are multiple doctors, each of whom treats multiple patients. (Here, we will assume that each doctor only works at a single hospital, and that each patient is only treated by a single doctor.)

Here’s an image of how that experimental design looks. Level 1 is the patients, level 2 is the doctors, and level 3 is the hospitals.

This is, of course, a simplified version - we would hope that there are more than two hospitals, four doctors and eight patients in the full sample!

We have a single fixed predictor of interest, treatment (for which there are two possible treatments, A or B), and a continuous response variable, outcome.

What model would we fit to these data? Well, it gets a touch more complex now that we have multiple levels in this dataset.

8.2.1 A three-level random intercepts model

Let’s put random slopes to one side, since they take a bit more thought, and think about how we would fit just some random intercepts for now.

It would be appropriate to fit two sets of random intercepts in this model, one for each set of clusters we have. In this case, that means a set of intercepts for the doctors, and a set of intercepts for the hospitals.

    lme_health_intercepts <- lmer(outcome ~ treatment + (1|doctor) + (1|hospital),
                                  data = health)
    summary(lme_health_intercepts)

    Linear mixed model fit by REML. t-tests use Satterthwaite's method [
    lmerModLmerTest]
    Formula: outcome ~ treatment + (1 | doctor) + (1 | hospital)
       Data: health

    REML criterion at convergence: 1848.5

    Scaled residuals:
         Min       1Q   Median       3Q      Max
    -2.56989 -0.66086  0.06162  0.67602  2.84690

    Random effects:
     Groups   Name        Variance Std.Dev.
     doctor   (Intercept)  2.1416  1.4634
     hospital (Intercept)  0.1688  0.4108
     Residual             26.3425  5.1325
    Number of obs: 300, groups: doctor, 30; hospital, 5

    Fixed effects:
                     Estimate Std. Error       df t value Pr(>|t|)
    (Intercept)       26.6155     0.5299   8.4431   50.23 9.34e-12 ***
    treatmentsurgery   6.2396     0.5926 269.0000   10.53  < 2e-16 ***
    ---
    Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

    Correlation of Fixed Effects:
                (Intr)
    trtmntsrgry -0.559

This produces a model with two random effects, namely, two sets of random intercepts.
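For readers without access to health.csv, the same model structure can be tried on simulated data. The sketch below is only an illustration under stated assumptions: the data frame sim, its column doc_index, the effect sizes and the seed are all made up, and only the sample sizes (300 patients, 30 doctors, 5 hospitals) are chosen to mirror the output above. It uses lmer() from lme4 directly, so it reports no p-values.

```r
library(lme4)

set.seed(42)  # arbitrary seed so that the sketch is reproducible

# Simulated stand-in (NOT the real health data):
# 5 hospitals x 6 doctors per hospital x 10 patients per doctor = 300 rows
sim <- expand.grid(patient = 1:10, doc_index = 1:6,
                   hospital = paste0("H", 1:5),
                   stringsAsFactors = FALSE)
sim$doctor    <- paste0(sim$hospital, "_D", sim$doc_index)  # unique doctor IDs
sim$treatment <- rep(c("A", "B"), length.out = nrow(sim))   # alternates within doctor

# Made-up cluster effects, named so they can be looked up by ID
hospital_effect <- setNames(rnorm(5, sd = 1), unique(sim$hospital))
doctor_effect   <- setNames(rnorm(30, sd = 1.5), unique(sim$doctor))

sim$outcome <- 25 + 5 * (sim$treatment == "B") +
  unname(hospital_effect[sim$hospital]) +
  unname(doctor_effect[sim$doctor]) +
  rnorm(nrow(sim), sd = 5)

# Same random intercepts structure as lme_health_intercepts
sim_fit <- lmer(outcome ~ treatment + (1 | doctor) + (1 | hospital), data = sim)

# Number of levels lme4 found for each clustering variable
print(ngrps(sim_fit))
```

Because the doctor IDs are built from the hospital ID, each doctor belongs to exactly one hospital, so the two intercept terms describe a nested structure. With simulated data the fit may report a singular fit message; the estimates themselves are not meaningful here.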

8.2.2 Where to include random slopes?

Deciding which level(s) to fit random slopes at requires us to think about which level of our hierarchy the treatment variable has been applied at. We’ll work through each possibility in turn.

Predictor varies at level 1

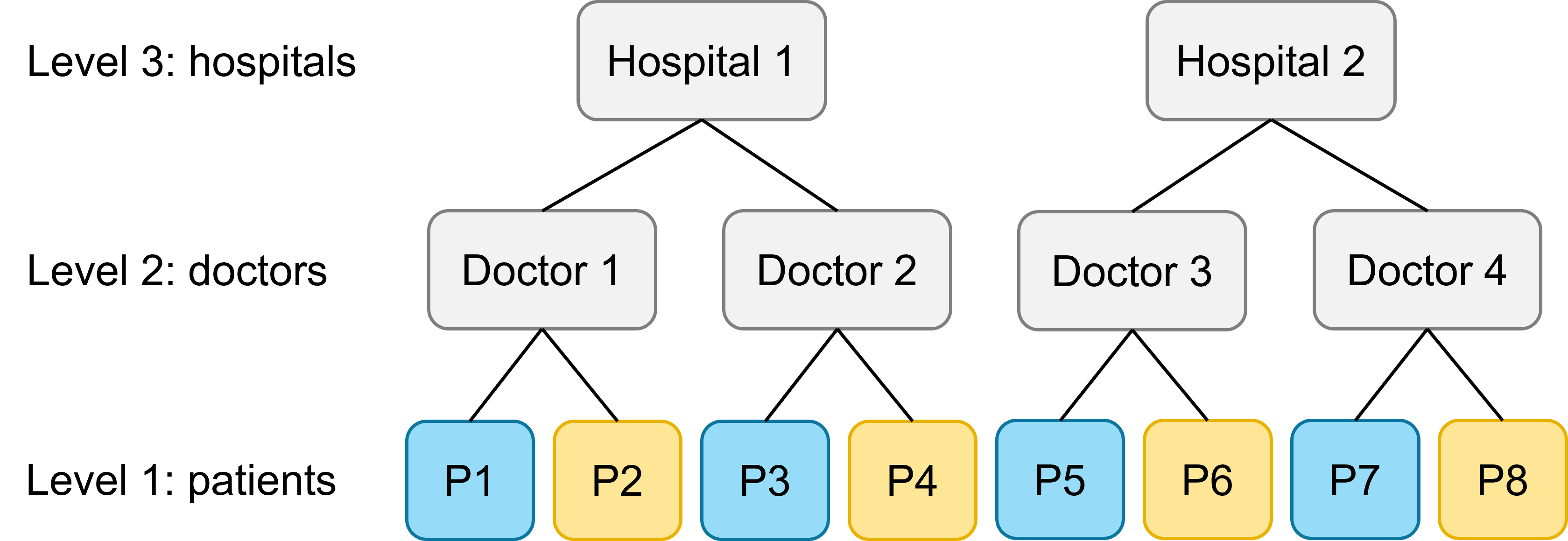

Let’s start by imagining the following scenario: the Treatment variable is varying at our lowest level. Each patient receives only one type of treatment (A or B), but both treatment types are represented “within” each doctor and within each hospital:

As a result, it would be inappropriate to ask lme4 to fit random slopes for the treatment variable at the patient level. Instead, the “full” model (i.e., a model containing all of the possible fixed and random effects) would be the following:

    lme_health_slopes <- lmer(outcome ~ treatment + (1 + treatment|doctor) +
                                (1 + treatment|hospital), data = health)
    summary(lme_health_slopes)

    Linear mixed model fit by REML. t-tests use Satterthwaite's method [
    lmerModLmerTest]
    Formula: outcome ~ treatment + (1 + treatment | doctor) + (1 + treatment |
        hospital)
       Data: health

    REML criterion at convergence: 1834.1

    Scaled residuals:
         Min       1Q   Median       3Q      Max
    -2.38341 -0.60702 -0.02763  0.71956  2.88951

    Random effects:
     Groups   Name             Variance Std.Dev. Corr
     doctor   (Intercept)       7.8846  2.8080
              treatmentsurgery  7.3157  2.7047   -0.96
     hospital (Intercept)       0.5085  0.7131
              treatmentsurgery  3.5595  1.8867   -0.91
     Residual                  23.5775  4.8557
    Number of obs: 300, groups: doctor, 30; hospital, 5

    Fixed effects:
                     Estimate Std. Error      df t value Pr(>|t|)
    (Intercept)       26.6155     0.7223  4.0083  36.849 3.17e-06 ***
    treatmentsurgery   6.2396     1.1270  3.9992   5.537  0.00521 **
    ---
    Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

    Correlation of Fixed Effects:
                (Intr)
    trtmntsrgry -0.794

This produces a model with four sets of random effects: two sets of random intercepts, and two sets of random slopes.

Predictor varies at level 2

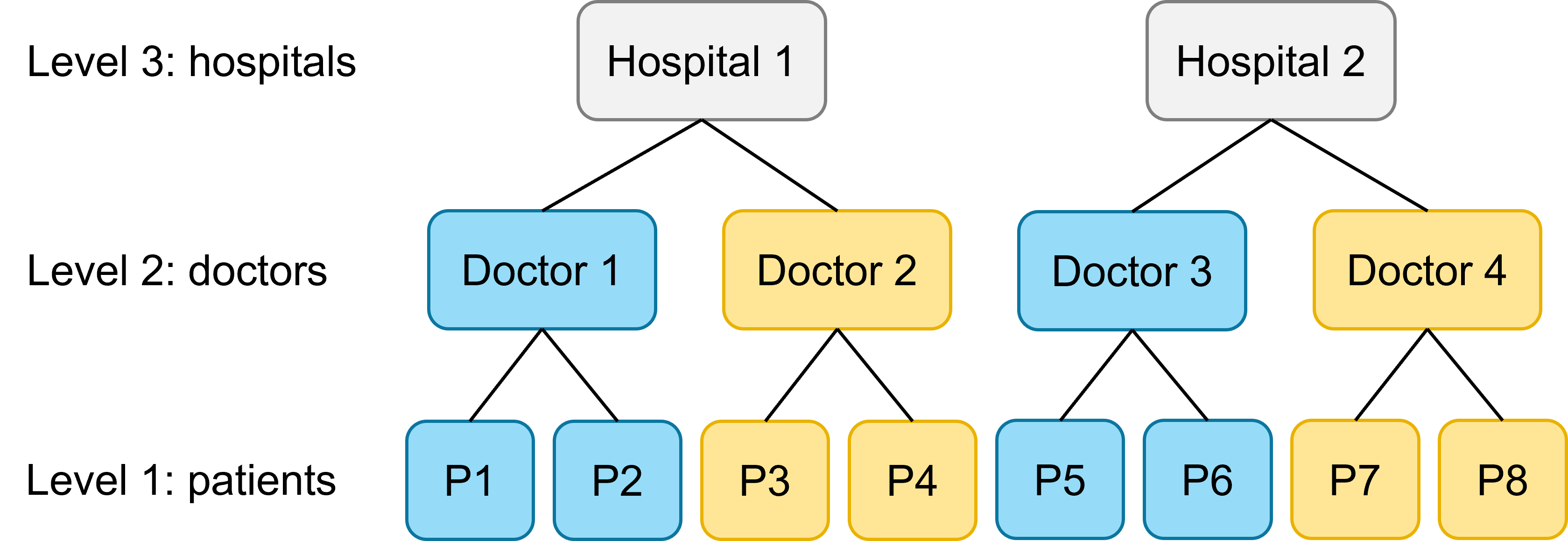

Let’s now imagine a (perhaps more realistic) scenario. Each doctor is in fact a specialist in a certain type of treatment, but cannot deliver both. For this, we will need to read in the second version of our dataset.

    health2 <- read_csv("data/health2.csv")

If you look closely at the dataset, you can see that treatment does not vary within doctor; instead, it only varies within hospital.
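Rather than inspecting the rows by eye, one way to check where a predictor varies is to count its distinct values inside each cluster. This is a minimal sketch in base R: the helper varies_within() and the toy data frame are hypothetical and made up for illustration, since health2.csv itself is not reproduced here.

```r
# Hypothetical helper: does `predictor` take more than one value
# inside at least one group of `group`?
varies_within <- function(predictor, group) {
  n_values <- tapply(as.character(predictor), as.character(group),
                     function(x) length(unique(x)))
  any(n_values > 1)
}

# Toy data (made up): each doctor delivers one treatment only,
# but every hospital has a doctor for each treatment
toy <- data.frame(
  hospital  = rep(c("H1", "H2"), each = 4),
  doctor    = rep(c("D1", "D2", "D3", "D4"), each = 2),
  treatment = rep(c("A", "A", "B", "B"), times = 2)
)

within_doctor   <- varies_within(toy$treatment, toy$doctor)
within_hospital <- varies_within(toy$treatment, toy$hospital)
print(c(within_doctor = within_doctor, within_hospital = within_hospital))
```

On the real data, the equivalent calls would be varies_within(health2$treatment, health2$doctor) and varies_within(health2$treatment, health2$hospital). Random slopes for a predictor only make sense at levels where the predictor varies within the clusters.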

This means we cannot fit random slopes for treatment at the second level any more. We have to drop our random slopes for treatment by doctor, like this:

    lme_health_slopes2 <- lmer(outcome ~ treatment + (1|doctor) +
                                 (1 + treatment|hospital), data = health2)

    boundary (singular) fit: see help('isSingular')

    summary(lme_health_slopes2)

    Linear mixed model fit by REML. t-tests use Satterthwaite's method [
    lmerModLmerTest]
    Formula: outcome ~ treatment + (1 | doctor) + (1 + treatment | hospital)
       Data: health2

    REML criterion at convergence: 1845.7

    Scaled residuals:
         Min       1Q   Median       3Q      Max
    -2.46342 -0.69900 -0.05043  0.69559  3.09601

    Random effects:
     Groups   Name             Variance Std.Dev. Corr
     doctor   (Intercept)       6.318   2.514
     hospital (Intercept)       1.372   1.171
              treatmentsurgery  3.635   1.907    -1.00
     Residual                  24.417   4.941
    Number of obs: 300, groups: doctor, 30; hospital, 5

    Fixed effects:
                     Estimate Std. Error      df t value Pr(>|t|)
    (Intercept)       25.7019     0.9265  4.3745  27.740 4.38e-06 ***
    treatmentsurgery   4.5061     1.3766  4.0456   3.273   0.0302 *
    ---
    Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

    Correlation of Fixed Effects:
                (Intr)
    trtmntsrgry -0.808
    optimizer (nloptwrap) convergence code: 0 (OK)
    boundary (singular) fit: see help('isSingular')

This gives us three sets of random effects, as opposed to four.

Predictor varies at level 3

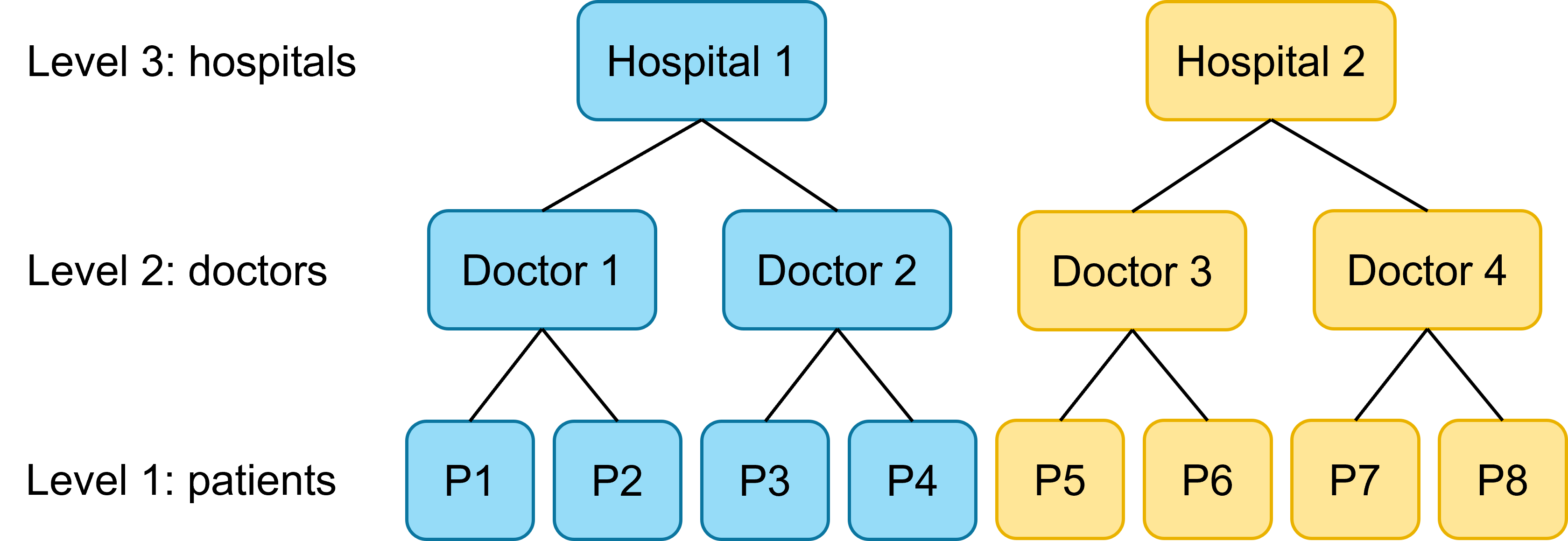

Finally, let’s imagine a scenario where each hospital is only equipped to offer one type of treatment (hopefully, not a realistic scenario!). Here, all doctors and patients within each hospital use exactly the same method.

At this stage, we can no longer include random slopes for the treatment predictor anywhere in our model. Each hospital, doctor and patient only experiences one of the two treatments, not both, so we have no variation between the treatments to estimate at any of these levels.

So, here, we would go back to our random intercepts only model.

8.3 Implicit vs explicit nesting

The different health datasets that have been explored above all have an important thing in common: the variables have been implicitly nested. Each new hospital, doctor and patient is given a unique identifier, to make it clear that doctors do not reoccur between hospitals, and patients do not reoccur between doctors.

In other words, all the information about the nesting is captured implicitly in the way that the data are coded.

However, you might sometimes be working with a dataset that has not been coded this way. So how do you deal with those situations? You have a few options:

- Recode your dataset so it is implicitly nested

- Use explicit nesting in your lme4 model formula

- Use the A/B syntax in your lme4 model formula

8.3.1 The Pastes dataset

We’ll use another internal lme4 dataset, the Pastes dataset, to show you what these three options look like in practice.

    data("Pastes")
    head(Pastes)

      strength batch cask sample
    1     62.8     A    a    A:a
    2     62.6     A    a    A:a
    3     60.1     A    b    A:b
    4     62.3     A    b    A:b
    5     62.7     A    c    A:c
    6     63.1     A    c    A:c

This dataset is about measuring the strength of a chemical paste product, which is delivered in batches, each batch consisting of several casks. From each of ten random deliveries of the product, three casks were chosen at random (for a total of 30 casks). A sample was taken from each cask; from each sample, there were two assays, for a total of 60 assays.

There are four variables:

- strength, paste strength, a continuous response variable; measured for each assay

- batch, delivery batch from which the sample was chosen (10 groups, A to J)

- cask, cask within the delivery batch from which the sample was chosen (3 groups, a to c)

- sample, batch & cask combination (30 groups, A:a to J:c)

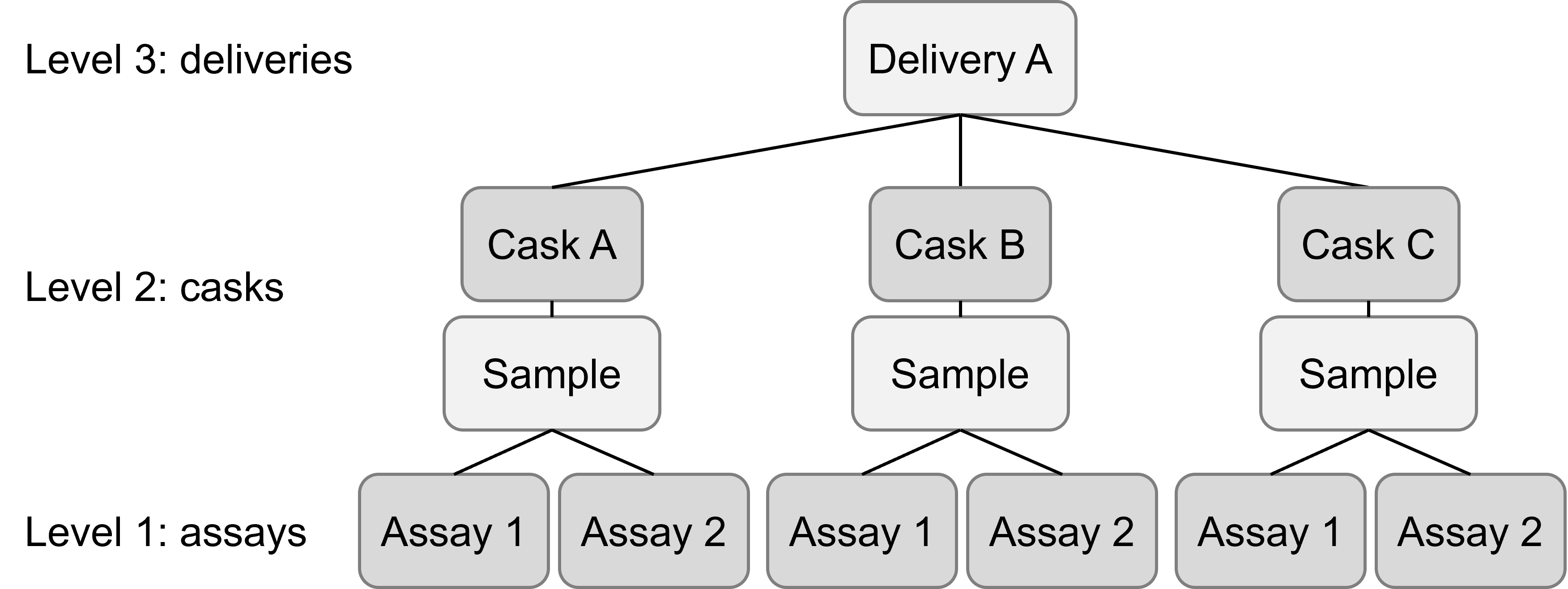

The experimental design, when drawn out, looks like this:

At first glance, this might look as if it’s a four-level model: assays within samples within casks within deliveries. However, that’s a bit of an overcomplication. There is only one sample collected per cask, meaning that we can really just think about assays being nested within casks directly.

The aim of this experiment was simply to assess the average strength of the chemical paste, adjusting for variation across batches and casks. Therefore, there are no fixed effects in the model - instead, we simply write 1 for the fixed portion of our model. We also won’t have any random slopes, only random intercepts.

If we follow the same procedure we did above for the health example, we might try something like this:

    lme_paste <- lmer(strength ~ 1 + (1|batch) + (1|cask), data = Pastes)
    summary(lme_paste)

    Linear mixed model fit by REML. t-tests use Satterthwaite's method [
    lmerModLmerTest]
    Formula: strength ~ 1 + (1 | batch) + (1 | cask)
       Data: Pastes

    REML criterion at convergence: 301.5

    Scaled residuals:
         Min       1Q   Median       3Q      Max
    -1.49025 -0.90096 -0.01247  0.62911  1.82246

    Random effects:
     Groups   Name        Variance Std.Dev.
     batch    (Intercept) 3.3639   1.8341
     cask     (Intercept) 0.1487   0.3856
     Residual             7.3060   2.7030
    Number of obs: 60, groups: batch, 10; cask, 3

    Fixed effects:
                Estimate Std. Error      df t value Pr(>|t|)
    (Intercept)  60.0533     0.7125  6.7290   84.28 1.99e-11 ***
    ---
    Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Something is wrong with this model. To spot it, we have to look carefully at the bottom of the random effects section, where it says Number of obs (short for observations).

lme4 has correctly identified that there are 10 delivery batches, and has fitted a set of 10 random intercepts for those batches - all good so far. However, R believes that we only have 3 casks, because the cask variable is not implicitly nested (the labels a, b and c are reused in every batch), and so it has only fitted a set of 3 random intercepts for that variable.

But this isn’t what we want. There is no link between cask a in batch A, and cask a in batch D - they have no reason to be more similar to each other than they are to other casks. We actually have 30 unique casks, and would like for each of them to have its own random intercept.
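The mismatch between 3 cask labels and 30 physical casks can be confirmed directly from the data. This is a small sketch in base R, assuming lme4 is installed so that its Pastes dataset can be loaded; the object names are only illustrative.

```r
# Pastes ships with lme4; loading it this way does not attach the package
data("Pastes", package = "lme4")

# Every cask label appears in every batch, twice (two assays per cask)
label_counts <- table(Pastes$batch, Pastes$cask)
print(label_counts)

# Number of groups that (1|cask) sees ...
n_cask_labels <- nlevels(Pastes$cask)

# ... versus the number of distinct batch-and-cask combinations
n_unique_casks <- nlevels(interaction(Pastes$batch, Pastes$cask, drop = TRUE))

print(c(labels = n_cask_labels, unique_casks = n_unique_casks))
```

A cross-tabulation with no empty cells, as here, is a warning sign: the labels make batch and cask look fully crossed, even though the design is nested.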

8.3.2 Recoding for implicit nesting

As shown above, the formula strength ~ 1 + (1|batch) + (1|cask) does not produce the model we want, because we don’t have implicit coding in the cask variable.

So, let’s create a new variable that gives unique values to each of the casks in our dataset. We’ll do this using the mutate function and the paste() function to create a unique ID for each cask.

    Pastes <- Pastes %>% mutate(unique_cask = paste(batch, cask, sep = "_"))
    head(Pastes)

      strength batch cask sample unique_cask
    1     62.8     A    a    A:a         A_a
    2     62.6     A    a    A:a         A_a
    3     60.1     A    b    A:b         A_b
    4     62.3     A    b    A:b         A_b
    5     62.7     A    c    A:c         A_c
    6     63.1     A    c    A:c         A_c

This generates 30 unique IDs, one for each of our unique casks. (We then have two observations of strength for each unique_cask.)

Now, we can go ahead and fit our desired model:

    lme_paste_implicit <- lmer(strength ~ 1 + (1|batch) + (1|unique_cask),
                               data = Pastes)
    summary(lme_paste_implicit)

    Linear mixed model fit by REML. t-tests use Satterthwaite's method [
    lmerModLmerTest]
    Formula: strength ~ 1 + (1 | batch) + (1 | unique_cask)
       Data: Pastes

    REML criterion at convergence: 247

    Scaled residuals:
        Min      1Q  Median      3Q     Max
    -1.4798 -0.5156  0.0095  0.4720  1.3897

    Random effects:
     Groups      Name        Variance Std.Dev.
     unique_cask (Intercept) 8.434    2.9041
     batch       (Intercept) 1.657    1.2874
     Residual                0.678    0.8234
    Number of obs: 60, groups: unique_cask, 30; batch, 10

    Fixed effects:
                Estimate Std. Error      df t value Pr(>|t|)
    (Intercept)  60.0533     0.6769  9.0000   88.72 1.49e-14 ***
    ---
    Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

This time, lme4 has correctly identified that there are 30 unique casks, from 10 different batches. We’ve solved the problem!

Incidentally, as you may have already noticed, the recoding that we did above also perfectly replicates the grouping in the existing sample variable. This means we would get an identical result if we fitted the model strength ~ 1 + (1|batch) + (1|sample) instead.
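The claim that the recoded variable matches sample can be verified with a cross-tabulation. The sketch below uses base R only and assumes lme4 is installed for the dataset; note that it reloads a fresh copy of Pastes and recreates unique_cask with paste() rather than mutate(), so it does not depend on earlier code.

```r
data("Pastes", package = "lme4")
Pastes$unique_cask <- paste(Pastes$batch, Pastes$cask, sep = "_")

# Rows are the recoded IDs, columns are the levels of the existing variable
tab <- table(Pastes$unique_cask, Pastes$sample)

# The two groupings are the same if every row and every column
# has exactly one non-empty cell (a one-to-one mapping between labels)
same_grouping <- all(rowSums(tab > 0) == 1) && all(colSums(tab > 0) == 1)
print(same_grouping)
```

The labels themselves differ (A_a versus A:a), but a random effect only depends on which observations share a group, not on what the group is called.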

8.3.3 Fitting a model with explicit nesting

If we’re in a situation like the above where we don’t have nice, neat implicitly coded variables, but we don’t really want to spend loads of time recoding a bunch of variables, we can instead fit our model using explicit nesting in lme4.

That essentially means combining the recoding and model fitting steps, so that you don’t have to save a new variable.

For the Pastes dataset, it would look like this:

    lme_paste_explicit <- lmer(strength ~ 1 + (1|batch) + (1|batch:cask), data = Pastes)
    summary(lme_paste_explicit)

    Linear mixed model fit by REML. t-tests use Satterthwaite's method [
    lmerModLmerTest]
    Formula: strength ~ 1 + (1 | batch) + (1 | batch:cask)
       Data: Pastes

    REML criterion at convergence: 247

    Scaled residuals:
        Min      1Q  Median      3Q     Max
    -1.4798 -0.5156  0.0095  0.4720  1.3897

    Random effects:
     Groups     Name        Variance Std.Dev.
     batch:cask (Intercept) 8.434    2.9041
     batch      (Intercept) 1.657    1.2874
     Residual               0.678    0.8234
    Number of obs: 60, groups: batch:cask, 30; batch, 10

    Fixed effects:
                Estimate Std. Error      df t value Pr(>|t|)
    (Intercept)  60.0533     0.6769  9.0000   88.72 1.49e-14 ***
    ---
    Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Notice that the output for this model and for the one right above are the same - that’s because they are exactly equivalent to each other! The batch:cask term defines the same 30 groups as the unique_cask variable we created with paste() earlier, so we’ve effectively moved the recoding step into the formula.

8.3.4 An alternative approach: the A/B syntax

There is another way to deal with nested random effects that haven’t been implicitly coded into the dataset. It’s not necessarily the way that we would recommend - recoding your variables and/or using explicit nesting has far less potential to trip you up and go wrong - but we’ll introduce it briefly here, since it’s something you’re likely to see if you start working with mixed models a lot.

We could fit the same model to the Pastes dataset, and achieve a set of 30 intercepts for cask and 10 for batch, without making use of the sample variable or using the A:B notation.

It works like this: when random effect B is nested inside random effect A, you can simply write A/B on the right hand side of the |.

    lme_paste_shorthand <- lmer(strength ~ 1 + (1|batch/cask), data = Pastes)
    summary(lme_paste_shorthand)

    Linear mixed model fit by REML. t-tests use Satterthwaite's method [
    lmerModLmerTest]
    Formula: strength ~ 1 + (1 | batch/cask)
       Data: Pastes

    REML criterion at convergence: 247

    Scaled residuals:
        Min      1Q  Median      3Q     Max
    -1.4798 -0.5156  0.0095  0.4720  1.3897

    Random effects:
     Groups     Name        Variance Std.Dev.
     cask:batch (Intercept) 8.434    2.9041
     batch      (Intercept) 1.657    1.2874
     Residual               0.678    0.8234
    Number of obs: 60, groups: cask:batch, 30; batch, 10

    Fixed effects:
                Estimate Std. Error      df t value Pr(>|t|)
    (Intercept)  60.0533     0.6769  9.0000   88.72 1.49e-14 ***
    ---
    Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

This gives you an identical model output to both of the other two models we’ve tried. That’s because (1|batch/cask) is actually shorthand for (1|batch) + (1|batch:cask), and in this dataset as we’ve seen above, batch:cask is the same as sample and unique_cask. In other words, we’ve fitted exactly the same model each time.
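To compare the three specifications side by side without reading through each summary, the fits can be collected in a list. This is a sketch under a few assumptions: it calls lmer() from lme4 directly (so there are no Satterthwaite p-values), it recreates unique_cask with base R, and the names fits, reml_loglik and group_counts are only illustrative.

```r
library(lme4)
data("Pastes", package = "lme4")
Pastes$unique_cask <- paste(Pastes$batch, Pastes$cask, sep = "_")

fits <- list(
  implicit  = lmer(strength ~ 1 + (1 | batch) + (1 | unique_cask), data = Pastes),
  explicit  = lmer(strength ~ 1 + (1 | batch) + (1 | batch:cask), data = Pastes),
  shorthand = lmer(strength ~ 1 + (1 | batch/cask), data = Pastes)
)

# REML log-likelihood of each fit
reml_loglik <- sapply(fits, function(m) as.numeric(logLik(m)))
print(reml_loglik)

# Number of groups per clustering variable, sorted so the columns line up
group_counts <- sapply(fits, function(m) sort(unname(ngrps(m))))
print(group_counts)
```

If the three formulas really describe the same model, the log-likelihoods should agree up to numerical tolerance, and each fit should report 10 groups at the batch level and 30 at the cask level.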

8.3.5 Which option is best?

Overall: implicit coding of your dataset is best, and it’s best to do this implicit coding during data collection itself. It prevents mistakes, because the experimental design is clear from the data structure. It’s also the easiest and most flexible in terms of coding in lme4.

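In the absence of an implicitly coded dataset, we strongly recommend sticking with explicit nesting rather than the shorthand syntax - yes, it requires more typing, but it’s safer, because every grouping term is written out in full and it is easy to get the order of A/B the wrong way round.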
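One way the shorthand can go wrong is writing the two factors in the wrong order. The sketch below, which assumes lme4 is installed and uses illustrative object names, fits the intended formula and a deliberately reversed one, then compares the number of groups each model found. The reversed fit may print a singular fit message.

```r
library(lme4)
data("Pastes", package = "lme4")

# Intended nesting: casks inside batches,
# i.e. (1 | batch) + (1 | batch:cask)
right_way <- lmer(strength ~ 1 + (1 | batch/cask), data = Pastes)

# Reversed by mistake: expands to (1 | cask) + (1 | cask:batch),
# which treats the three cask labels as the top-level grouping
wrong_way <- lmer(strength ~ 1 + (1 | cask/batch), data = Pastes)

print(ngrps(right_way))
print(ngrps(wrong_way))
```

Both models run without an error, so the only clue is in the group counts: the reversed formula reports 3 groups at the top level where 10 batches were expected.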

And, no matter which method you choose, always check the model output to see that the number of groups per clustering variable matches what you expect to see.

8.4 Exercises

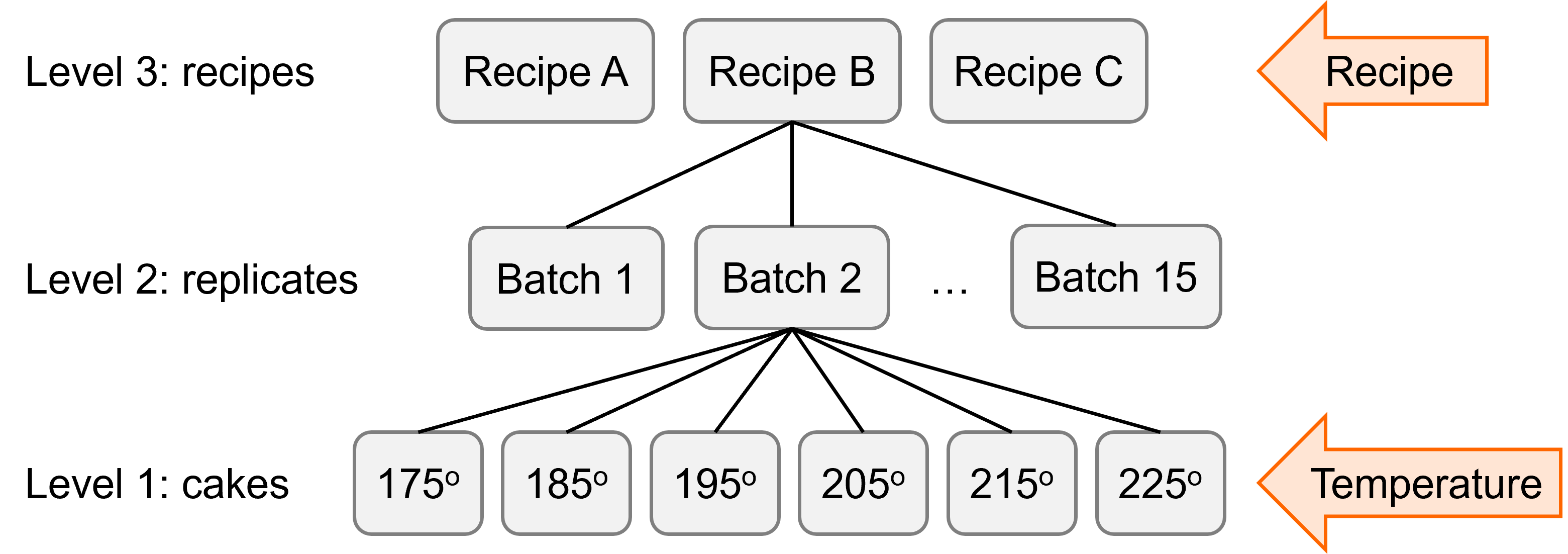

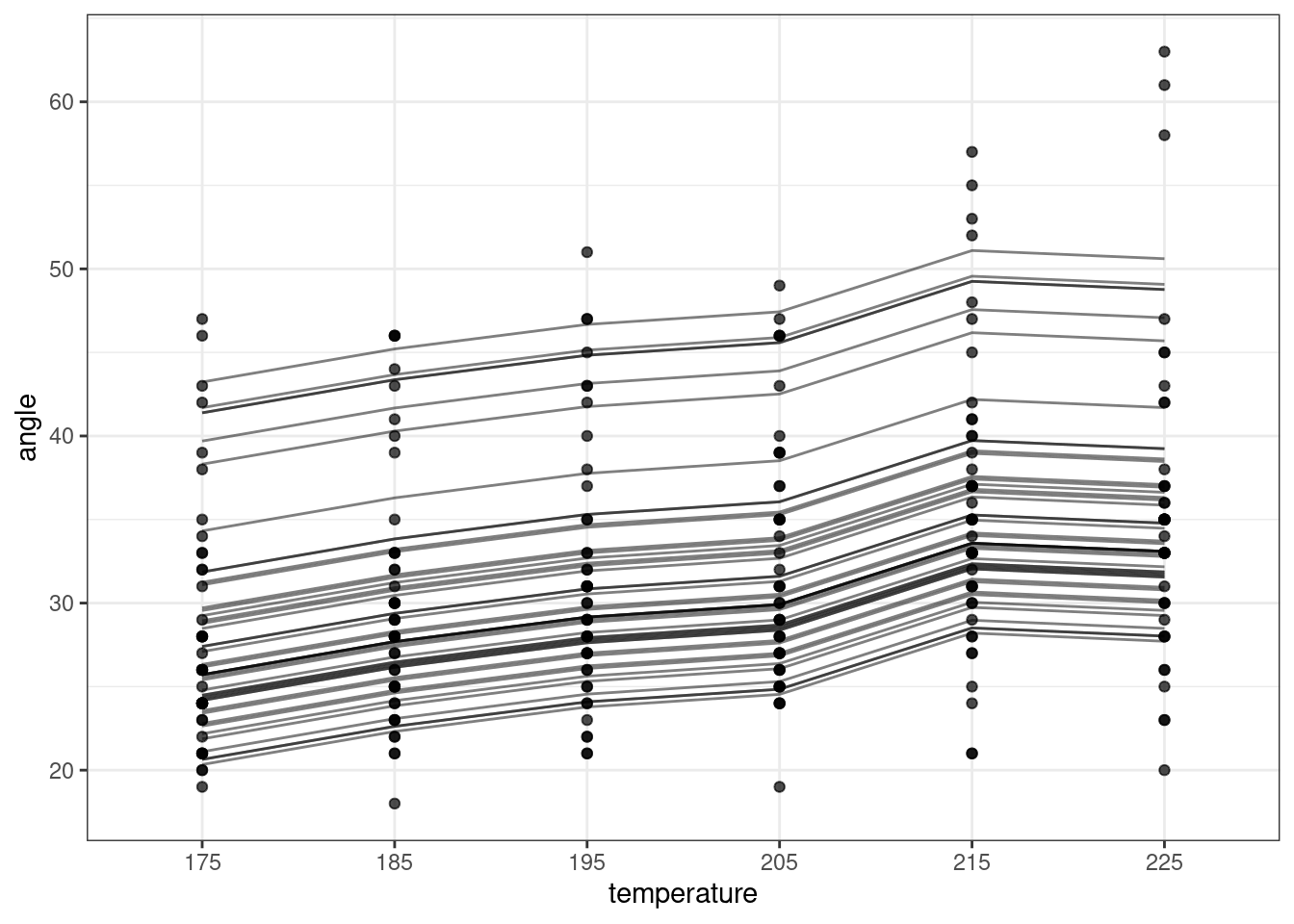

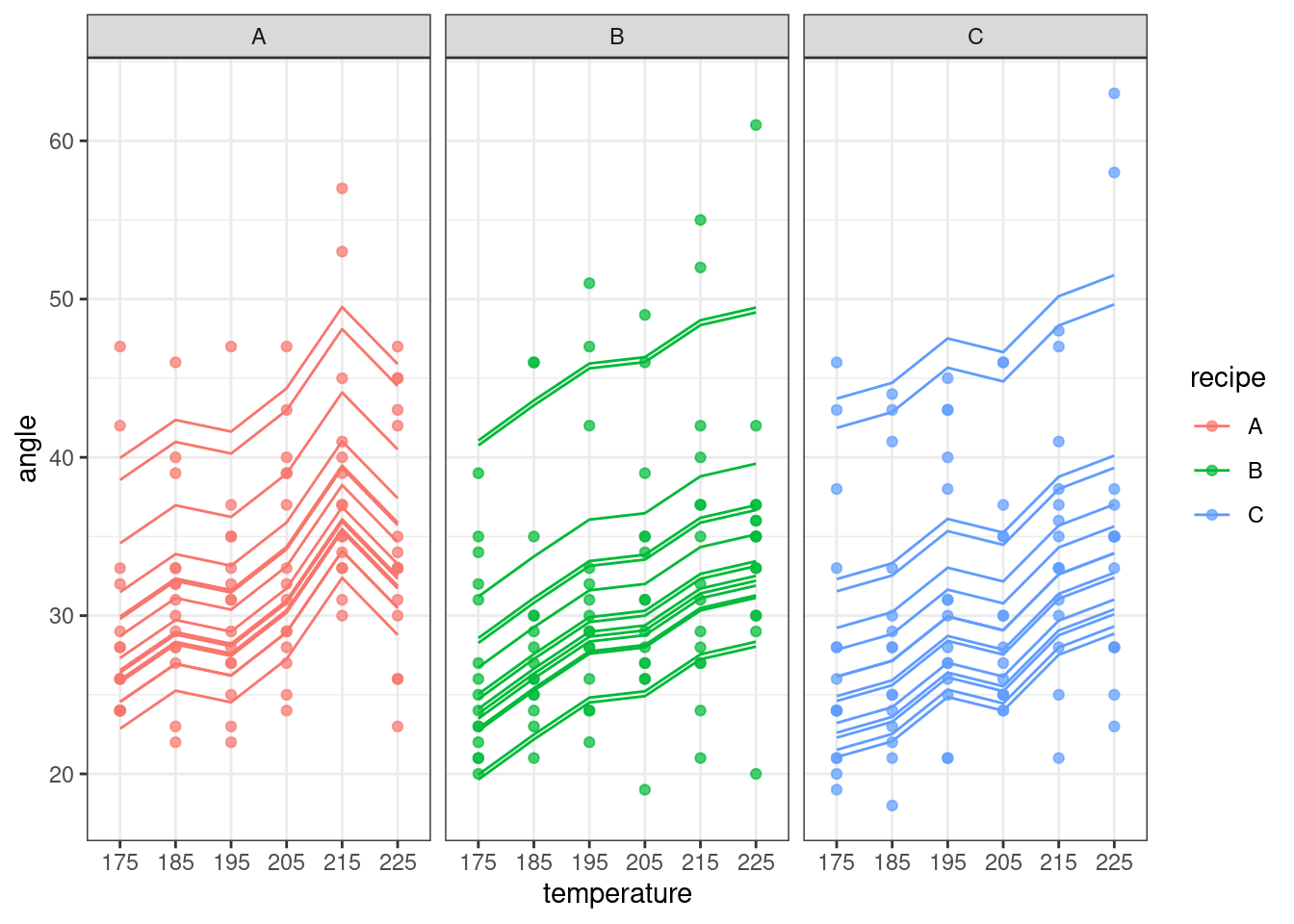

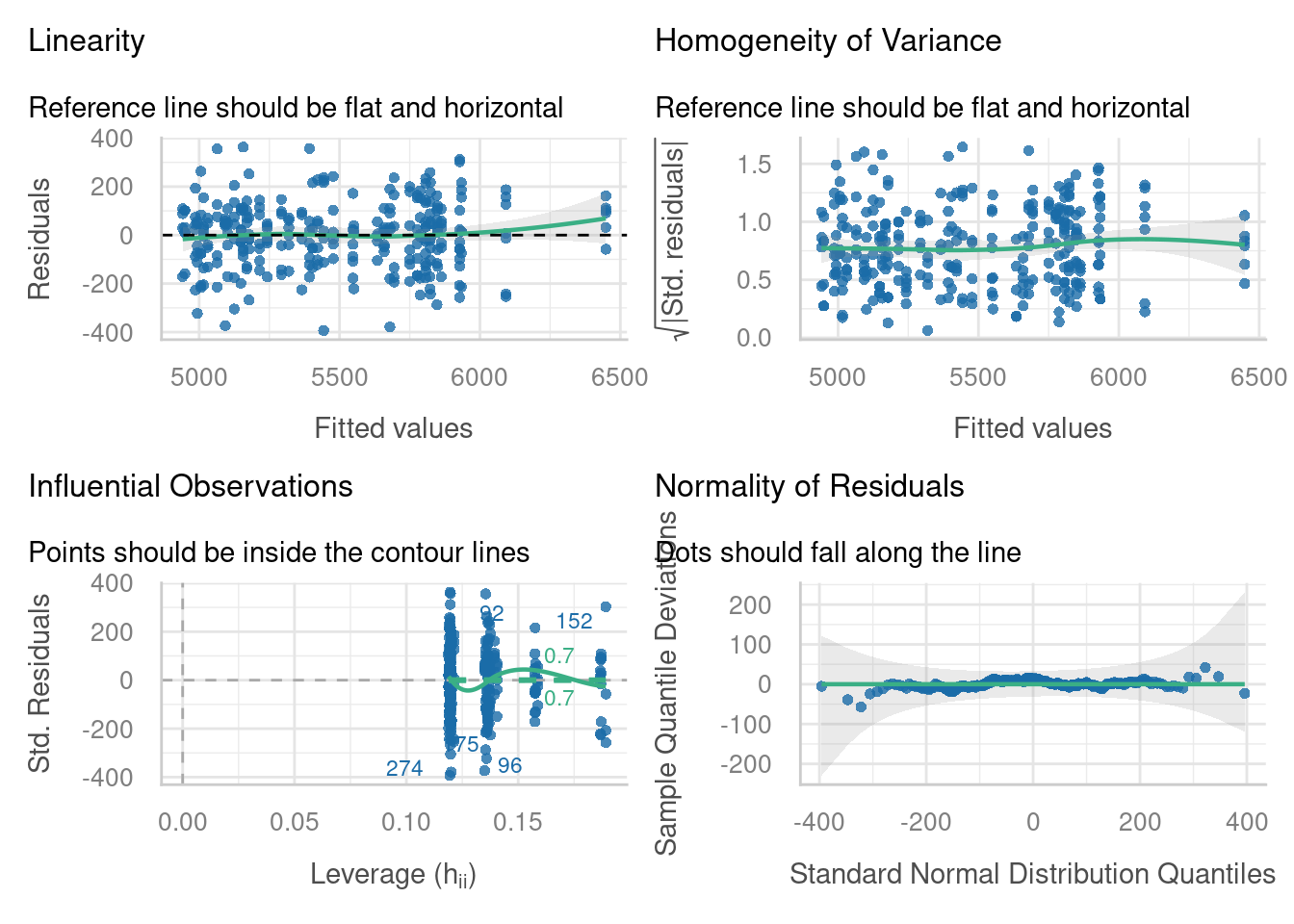

8.4.1 Cake

For more information on the very best way to bake a chocolate cake (and a lovely demonstration at the end about the dangers of extrapolating from a linear model), this blog post is a nice source. It’s written by a data scientist who was so curious about the quirky cake dataset that he contacted Iowa State University, who helped him unearth Cook’s original thesis.

8.4.2 Parallel fibres

8.5 Summary

Sometimes, a dataset contains multiple clustering variables. When one of those clustering variables is nested inside the other, we can model this effectively by estimating random effects at multiple levels.

Adding additional levels can create some complications, e.g., determining which level of the dataset your predictor variables are varying at. But it can also allow us to deal with real-life hierarchical data structures, which are common in research.

- Random effect B is nested inside random effect A, if each category of B occurs uniquely within only one category of A

- It’s important to figure out what level of the hierarchy or model a predictor variable is varying at, to determine where random slopes are appropriate

- Nested random effects can be implicitly or explicitly coded in a dataframe, which determines how the model should be specified in lme4